Large language models (LLMs) have always struggled with memory. Imagine trying to hold a conversation with someone who forgets what you just said. It’s a bit like the film Memento, where the protagonist relies on notes to piece together his life. If you’ve ever found yourself repeatedly explaining the same details to an AI, you’ll understand the challenge.

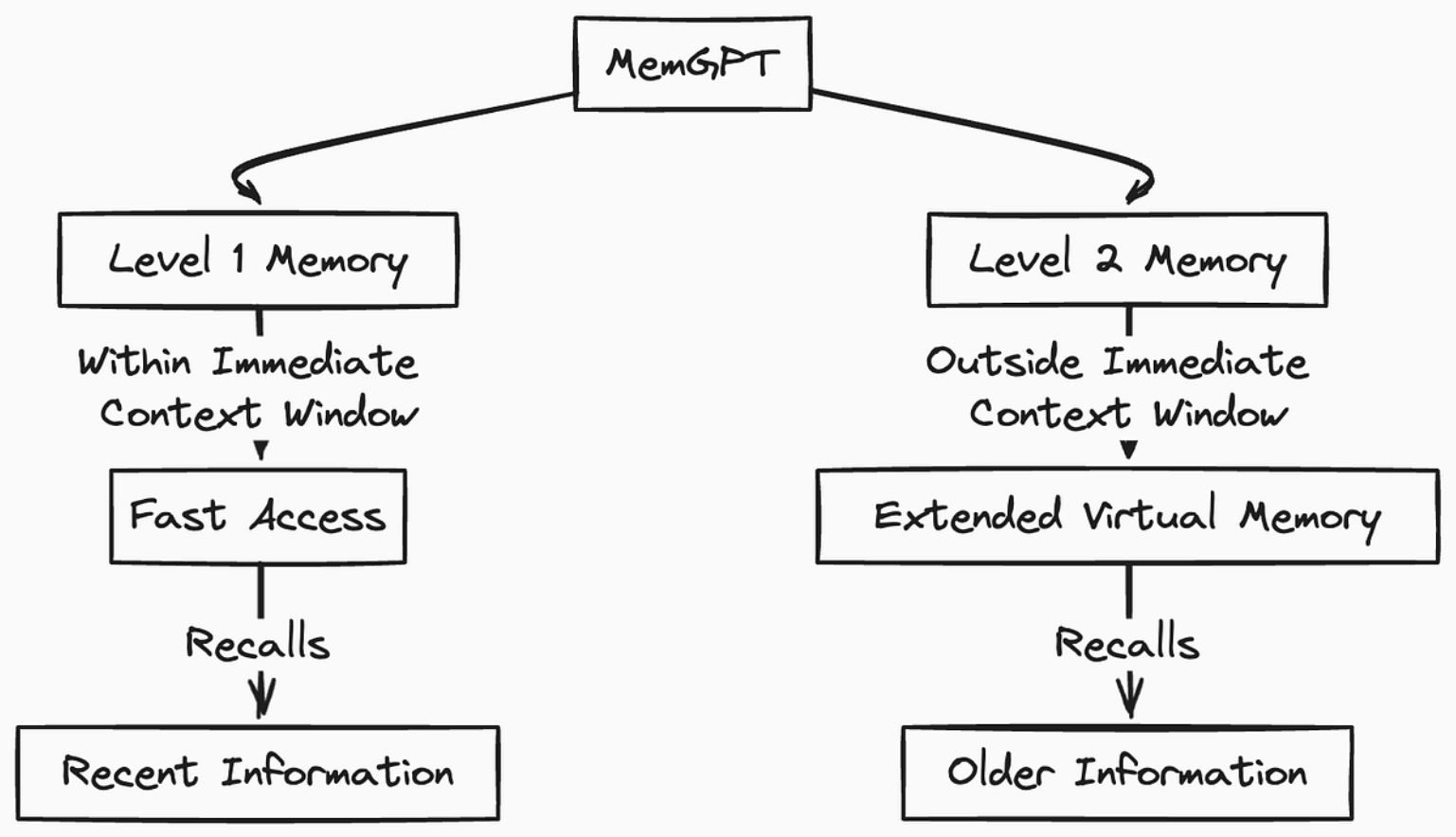

Handling short-term memory is relatively simple: just include the most recent exchanges in the prompt. Long-term memory needs a more strategic approach. Dumping every past interaction into the context can overflow the context window and bury the details that matter, so designers are building systems that selectively store key details, summarise previous dialogues, and retrieve only the information relevant to the current request.
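To make the split concrete, here is a minimal sketch in Python. It assumes a sliding window of recent turns for short-term memory and a plain list of saved facts for long-term memory, retrieved by simple word overlap. The class and method names (`ConversationMemory`, `remember`, `retrieve`, `build_context`) are invented for illustration and don't come from any particular library. Summarisation is left out, and a real system would likely use something smarter than word overlap for retrieval.

```python
from collections import deque


class ConversationMemory:
    """Toy memory: a sliding window of recent turns plus a list of saved facts."""

    def __init__(self, window_size=4):
        # Short-term memory: only the most recent turns are kept.
        self.recent = deque(maxlen=window_size)
        # Long-term memory: facts explicitly chosen for storage.
        self.facts = []

    def add_turn(self, role, text):
        self.recent.append((role, text))

    def remember(self, fact):
        # Skip exact duplicates so the store does not grow needlessly.
        if fact not in self.facts:
            self.facts.append(fact)

    def retrieve(self, query, limit=2):
        # Rank stored facts by how many words they share with the query.
        query_words = set(query.lower().split())
        scored = []
        for fact in self.facts:
            overlap = len(query_words & set(fact.lower().split()))
            if overlap:
                scored.append((overlap, fact))
        scored.sort(key=lambda pair: pair[0], reverse=True)
        return [fact for _, fact in scored[:limit]]

    def build_context(self, query):
        # Relevant facts first, then the recent turns, then the new message.
        lines = [f"Known fact: {fact}" for fact in self.retrieve(query)]
        lines += [f"{role}: {text}" for role, text in self.recent]
        lines.append(f"user: {query}")
        return "\n".join(lines)


memory = ConversationMemory(window_size=2)
memory.remember("The user is allergic to peanuts")
memory.remember("The user lives in Lisbon")
memory.add_turn("user", "Hi there")
memory.add_turn("assistant", "Hello! How can I help?")
memory.add_turn("user", "I need dinner ideas")
context = memory.build_context("Suggest a snack without peanuts")
print(context)
```

With a window of two, the opening "Hi there" turn drops out of the prompt, while the peanut allergy is pulled back in because it overlaps with the new question. The Lisbon fact stays in storage but out of the context.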

This isn’t without its hurdles. Storing too much leads to data bloat, and outdated information can resurface if stored memories are never revisited. That’s why many solutions now attach timestamps to memories and use self-updating mechanisms to flag or remove stale details, so that the AI’s recall stays both accurate and useful.
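One way to picture timestamps and self-updating in practice is the sketch below. It assumes each memory is stored under a key, that a newer value overwrites the older one, and that anything untouched for longer than a chosen `max_age` counts as stale. The names (`TimestampedMemory`, `upsert`, `prune`) and the 30-day threshold are illustrative assumptions, not any vendor's API.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta


@dataclass
class MemoryItem:
    key: str
    value: str
    updated_at: datetime


class TimestampedMemory:
    """Toy store where every memory carries the time it was last updated."""

    def __init__(self, max_age):
        self.max_age = max_age
        self.items = {}

    def upsert(self, key, value, now):
        # A newer value for the same key replaces the old one instead of piling up.
        self.items[key] = MemoryItem(key, value, now)

    def is_stale(self, key, now):
        return now - self.items[key].updated_at > self.max_age

    def prune(self, now):
        # Remove every item older than max_age and return the removed keys.
        stale = [key for key in self.items if self.is_stale(key, now)]
        for key in stale:
            del self.items[key]
        return stale


start = datetime(2024, 1, 1)
store = TimestampedMemory(max_age=timedelta(days=30))
store.upsert("employer", "Acme Ltd", now=start)
store.upsert("city", "Lisbon", now=start)
# Forty days later the user changes jobs, which refreshes that memory.
store.upsert("employer", "Globex", now=start + timedelta(days=40))
removed = store.prune(now=start + timedelta(days=45))
print(removed)
```

Passing `now` in explicitly, rather than reading the clock inside the class, keeps the behaviour easy to test. Whether a stale memory should be deleted outright or merely flagged for confirmation is a design choice that depends on the application.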

To further enhance memory, some teams are incorporating knowledge graphs. These graphs provide a structured layout of information, making it easier for LLMs to map out relationships and perform multi-step reasoning. In contrast, systems that rely solely on unstructured text storage might stumble over conflicting or ambiguous data.
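As a rough illustration of why structure helps with multi-step reasoning, the sketch below stores facts as subject, relation, object triples and uses a breadth-first search to chain them together. The `KnowledgeGraph` class, its methods, and the sample facts are all made up for this example. Production graph memories are considerably richer, so treat this as a toy model of the idea only.

```python
from collections import defaultdict, deque


class KnowledgeGraph:
    """Toy directed graph of (subject, relation, object) triples."""

    def __init__(self):
        # subject -> list of (relation, object) edges
        self.edges = defaultdict(list)

    def add(self, subject, relation, obj):
        self.edges[subject].append((relation, obj))

    def find_path(self, start, goal, max_hops=3):
        """Breadth-first search; returns the triples linking start to goal, or None."""
        queue = deque([(start, [])])
        visited = {start}
        while queue:
            node, path = queue.popleft()
            if node == goal:
                return path
            if len(path) >= max_hops:
                continue  # do not expand beyond the hop limit
            for relation, neighbour in self.edges.get(node, []):
                if neighbour not in visited:
                    visited.add(neighbour)
                    queue.append((neighbour, path + [(node, relation, neighbour)]))
        return None


graph = KnowledgeGraph()
graph.add("Alice", "works_at", "Acme")
graph.add("Acme", "based_in", "Berlin")
graph.add("Berlin", "located_in", "Germany")
path = graph.find_path("Alice", "Germany")
for triple in path:
    print(triple)
```

No single stored fact says which country Alice works in, but following three edges answers the question. That kind of explicit chain is hard to recover reliably from a pile of unstructured text snippets.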

At present, vendor offerings vary. For instance, Zep leverages knowledge graphs, while Mem0 opts for a vector-based approach. Both paths aim to improve how LLMs access and apply historical data. If you’re exploring these tools, starting with a hosted cloud offering and moving to self-hosting as your needs grow might be a sensible route.
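For a feel of what a vector-based approach means in general, here is a self-contained sketch. It is not Mem0's API or implementation. It assumes a stand-in `embed` function that turns text into a bag-of-words vector (a real system would call an embedding model) and ranks stored memories by cosine similarity to the query. All names here are hypothetical.

```python
import math
from collections import Counter


def embed(text):
    # Stand-in for a real embedding model: a sparse bag-of-words vector.
    return Counter(text.lower().split())


def cosine(a, b):
    # Cosine similarity between two sparse vectors stored as Counters.
    dot = sum(a[word] * b[word] for word in a if word in b)
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    if norm_a == 0 or norm_b == 0:
        return 0.0
    return dot / (norm_a * norm_b)


class VectorMemory:
    """Toy vector store: keeps (text, vector) pairs and searches by similarity."""

    def __init__(self):
        self.entries = []

    def add(self, text):
        self.entries.append((text, embed(text)))

    def search(self, query, top_k=1):
        query_vec = embed(query)
        ranked = sorted(
            self.entries,
            key=lambda entry: cosine(query_vec, entry[1]),
            reverse=True,
        )
        return [text for text, _ in ranked[:top_k]]


vector_memory = VectorMemory()
vector_memory.add("user prefers vegetarian food")
vector_memory.add("user works as a nurse")
vector_memory.add("user has a dog named Rex")
best = vector_memory.search("what food does the user like")
print(best)
```

The food preference wins because it shares the most weight with the query. With learned embeddings the match would rest on meaning instead of exact words, which is the main appeal of the vector route.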

The pursuit of bringing solid long-term memory to AI is ongoing, but every step forward helps shape systems that understand context better and interact more naturally. By keeping a close eye on current challenges and solutions, you can navigate these emerging technologies with confidence.