ResNeXt builds directly on ResNet, VGG, and Inception: it keeps ResNet’s residual connections, follows the VGG/ResNet habit of stacking identical blocks, and borrows Inception’s split-transform-merge idea. If you’ve ever struggled to tune a model’s size, you’ll appreciate the extra knob ResNeXt adds: cardinality, the number of parallel paths inside each block. Alongside depth and width, cardinality gives you a third dimension to adjust when trading capacity against cost.

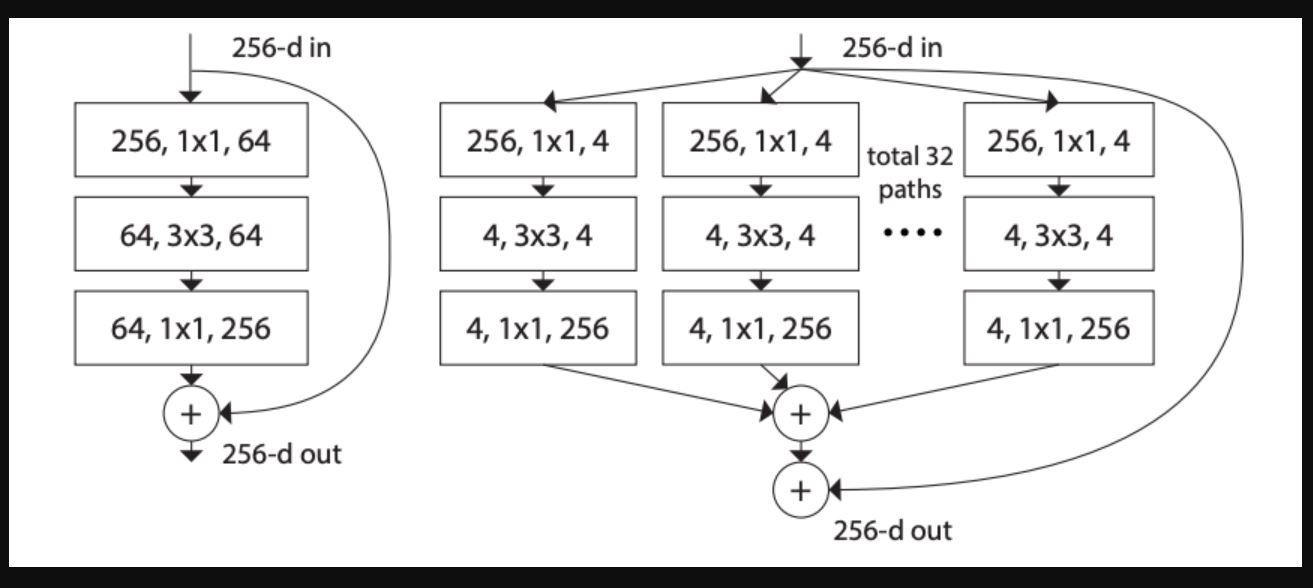

The key ingredient is ResNeXt’s use of grouped convolutions, which split the channels into groups, transform each group independently, and merge the results within a single layer. Figure 3 in the original paper shows three equivalent forms of the block, and the go-to setting is a cardinality of 32, which seems to hit the sweet spot between accuracy and computational cost. It’s a neat way to get more out of your model without making the design more complicated.
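To see why a cardinality of 32 costs about the same as a plain ResNet bottleneck, it helps to count weights. The sketch below is a back-of-the-envelope calculation, assuming a 256-channel input, 1x1 → 3x3 → 1x1 convolutions, and no biases or BatchNorm parameters; the function names are made up for illustration.

```python
def resnet_bottleneck_params(in_ch=256, width=64):
    """Weights in a 1x1 -> 3x3 -> 1x1 bottleneck (biases and BatchNorm ignored)."""
    return in_ch * width + 3 * 3 * width * width + width * in_ch


def resnext_bottleneck_params(in_ch=256, cardinality=32, group_width=4):
    """Weights in `cardinality` parallel paths, each 1x1 -> 3x3 -> 1x1
    with `group_width` channels per path (biases and BatchNorm ignored)."""
    d = group_width
    per_path = in_ch * d + 3 * 3 * d * d + d * in_ch
    return cardinality * per_path


print(resnet_bottleneck_params())                                    # 69632
print(resnext_bottleneck_params())                                   # 70144
print(resnext_bottleneck_params(cardinality=1, group_width=64))      # 69632
```

Both blocks come out at roughly 70k weights, so the 32x4d configuration changes how the capacity is arranged rather than how much of it there is. Setting the cardinality to 1 with a width of 64 recovers the ResNet count exactly.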

Diving into the math, each ResNeXt block follows a split-transform-merge pattern: the input is sent down several low-dimensional paths, each path transforms it independently, and the path outputs are summed. This modular design is practical as well as elegant, because a single block template carries over to a range of tasks. The network stacks these identical blocks, much like ResNet, but with adjusted channel widths and cardinality as the extra setting. The same template applies whether you’re using ResNeXt-50 (32x4d), meaning 32 paths of 4 channels each in the first stage, or customizing an architecture from scratch, which is what makes the design both efficient and scalable.
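Written out in the notation of the original paper, where $C$ is the cardinality and $\mathcal{T}_i$ is the transformation applied by the $i$-th path (all paths share the same topology), the aggregated transformation and the residual block built on it are:

$$
\mathcal{F}(x) = \sum_{i=1}^{C} \mathcal{T}_i(x),
\qquad
y = x + \sum_{i=1}^{C} \mathcal{T}_i(x)
$$

Here $x$ is the block input and $y$ its output; the first term of $y$ is the identity shortcut inherited from ResNet.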

For developers ready to implement ResNeXt, the key steps are initializing the core components, defining the data flow between them, and keeping track of how channel dimensions and spatial resolution change across layers. If you’ve ever wrestled with configuring model parameters, you’ll find that checking each stage on its own makes it much easier to confirm that shapes stay consistent as you vary the settings.
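One lightweight way to check channel and spatial bookkeeping before writing any layers is to trace the shapes by hand. The sketch below assumes the ResNet-50-style layout used for ResNeXt-50 (32x4d): a 7x7 stride-2 stem convolution, a 3x3 stride-2 max pool, and four stages of 3, 4, 6, and 3 blocks. The padding values and the function names are illustrative assumptions, not taken from the article.

```python
def conv_out(size, kernel, stride, padding):
    """Spatial output size of a convolution or pooling layer."""
    return (size + 2 * padding - kernel) // stride + 1


def trace_resnext_shapes(input_size=224, cardinality=32, group_width=4,
                         blocks_per_stage=(3, 4, 6, 3)):
    """Return (stage, blocks, grouped_width, out_channels, spatial_size) rows."""
    # Stem: 7x7 conv with stride 2, then 3x3 max pool with stride 2.
    size = conv_out(input_size, kernel=7, stride=2, padding=3)
    size = conv_out(size, kernel=3, stride=2, padding=1)

    rows = []
    for i, blocks in enumerate(blocks_per_stage):
        # Width of the grouped 3x3 conv; it doubles at every stage.
        width = cardinality * group_width * 2 ** i
        # Block output channels, assuming the ResNet-50 pattern 256, 512, ...
        out_channels = 256 * 2 ** i
        # Every stage after the first halves the resolution in its first block.
        if i > 0:
            size = conv_out(size, kernel=3, stride=2, padding=1)
        rows.append((f"conv{i + 2}", blocks, width, out_channels, size))
    return rows


for row in trace_resnext_shapes():
    print(row)
# ('conv2', 3, 128, 256, 56)
# ('conv3', 4, 256, 512, 28)
# ('conv4', 6, 512, 1024, 14)
# ('conv5', 3, 1024, 2048, 7)
```

Because the grouped width is always `cardinality * group_width` times a power of two, it stays divisible by the number of groups, which is the constraint a grouped convolution imposes on its channel count.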