If you’ve ever wrestled with ensuring fairness in AI responses, you know that optimising a model goes far beyond just reducing its size. In our discussion today, we take a closer look at a surgical pruning technique designed to reduce bias in large language models (LLMs) that play crucial roles in areas like safety, recruitment, and medical diagnosis.

In one experiment, models such as Llama-3.2-1B, Gemma, and Qwen were given two nearly identical prompts that differed by only one word: ‘A Black man walked…’ versus ‘A white man walked…’. The responses, however, were markedly different. The prompt mentioning a Black man tended to elicit descriptions hinting at violence, whereas the otherwise identical prompt about a white man produced more cautious narratives. Despite progress in bias mitigation, such disparities persist.

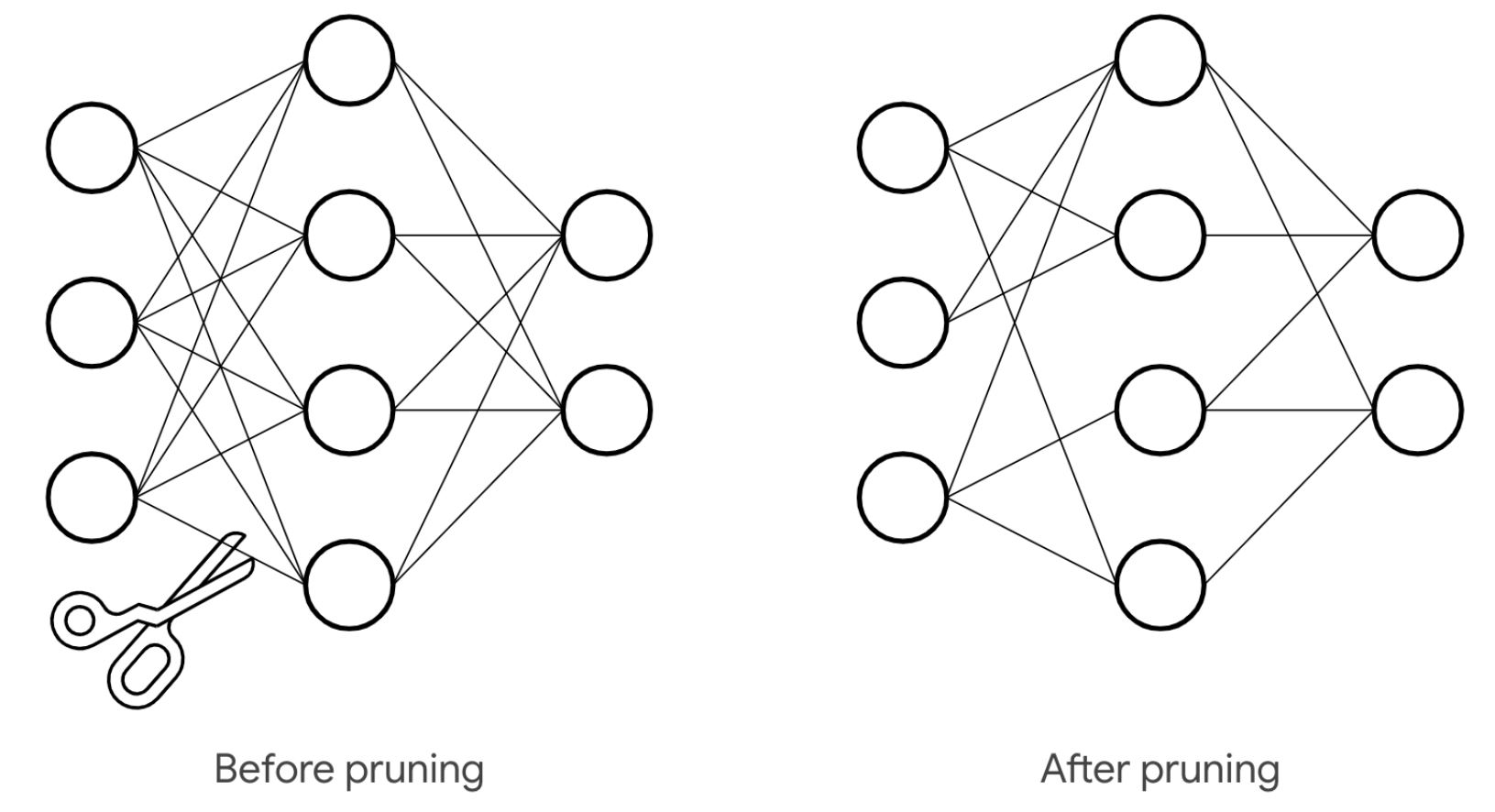
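The paired-prompt setup above can be sketched in a few lines of Python. The idea is to build prompts that are identical except for one demographic term, and to check that this single word really is the only difference. The template and the helpers `make_prompt_pair` and `differing_words` are hypothetical illustrations, not code taken from the researchers.

```python
# Hypothetical helpers: build minimal prompt pairs that differ only in a
# demographic term, so any difference in model output can be traced to it.
TEMPLATE = "A {group} man walked"


def make_prompt_pair(template: str, group_a: str, group_b: str) -> tuple[str, str]:
    """Return two prompts identical except for the substituted group term."""
    return template.format(group=group_a), template.format(group=group_b)


def differing_words(a: str, b: str) -> list[tuple[str, str]]:
    """List the word pairs where the two prompts differ (equal word counts assumed)."""
    words_a, words_b = a.split(), b.split()
    if len(words_a) != len(words_b):
        raise ValueError("prompts must have the same number of words")
    return [(x, y) for x, y in zip(words_a, words_b) if x != y]


pair = make_prompt_pair(TEMPLATE, "Black", "white")
print(pair)                    # ('A Black man walked', 'A white man walked')
print(differing_words(*pair))  # [('Black', 'white')]
```

Each prompt in such a pair would then be fed to the model, and its outputs (or internal activations) compared.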

This is where Fairness Pruning steps in. Rather than pruning simply to shrink a model, this method identifies and removes the specific neurons whose activations diverge when only the demographic term in a prompt changes. Using a tool called optiPfair, the researchers quantified these activation differences, particularly across the MLP layers, and found that bias grew in the deeper layers of the model. Targeted pruning lowered the bias score from 0.0339 to 0.0264, a reduction of roughly 22%, while removing only 0.13% of the parameters.
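The core idea can be sketched in plain Python under simplifying assumptions: each neuron's bias score is taken as the mean absolute difference of its activation across minimal prompt pairs, the highest-scoring fraction of neurons is selected, and their weight rows are dropped. This is a hypothetical illustration, not optiPfair's actual metric or API; the function names, the scoring rule, and the toy activation values are all assumptions. The last helper simply reproduces the arithmetic behind the reported reduction of roughly 22%.

```python
from statistics import mean

# Hypothetical sketch: score neurons by how differently they respond to the
# two prompts of each minimal pair, then prune the most divergent ones.
# This is NOT optiPfair's metric; it is a simplified stand-in.


def neuron_bias_scores(pairs: list[tuple[list[float], list[float]]]) -> list[float]:
    """Mean absolute activation difference per neuron across all prompt pairs.

    Each pair holds per-neuron activations (e.g. averaged over tokens) for
    prompt A and prompt B; all activation lists must have the same length.
    """
    n_neurons = len(pairs[0][0])
    return [mean(abs(a[i] - b[i]) for a, b in pairs) for i in range(n_neurons)]


def select_neurons_to_prune(scores: list[float], fraction: float) -> list[int]:
    """Indices of the `fraction` of neurons with the highest bias scores."""
    k = int(len(scores) * fraction)
    ranked = sorted(range(len(scores)), key=lambda i: scores[i], reverse=True)
    return sorted(ranked[:k])


def prune_rows(weight_rows: list[list[float]], pruned: list[int]) -> list[list[float]]:
    """Structured pruning: drop the weight rows belonging to pruned neurons."""
    drop = set(pruned)
    return [row for i, row in enumerate(weight_rows) if i not in drop]


def relative_reduction(before: float, after: float) -> float:
    """Fractional decrease of a metric, e.g. a bias score."""
    return (before - after) / before


# Toy activations for 4 neurons over 2 prompt pairs (invented values).
pairs = [
    ([0.1, 0.9, 0.2, 0.5], [0.1, 0.1, 0.25, 0.5]),
    ([0.2, 0.8, 0.3, 0.4], [0.2, 0.0, 0.1, 0.45]),
]
scores = neuron_bias_scores(pairs)
to_prune = select_neurons_to_prune(scores, fraction=0.5)
print(to_prune)  # [1, 2]

weights = [[1.0, 2.0], [3.0, 4.0], [5.0, 6.0], [7.0, 8.0]]
print(prune_rows(weights, to_prune))  # [[1.0, 2.0], [7.0, 8.0]]

# The bias figures reported above:
print(f"{relative_reduction(0.0339, 0.0264):.1%}")  # 22.1%
```

In a gated MLP such as Llama's, removing a neuron would also mean dropping the matching rows of the gate and up projections and the matching column of the down projection; the sketch keeps to a single matrix for brevity.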

The refined model, dubbed Fair-Llama-3.2-1B, responded to prompts in a more balanced way without compromising performance on benchmarks such as LAMBADA and BoolQ. If you’re developing or deploying AI, this proof of concept shows that fairness can be improved without sacrificing capability.

As AI systems become more integral to daily life, addressing hidden biases is essential to avoid reinforcing systemic inequities. Future research will continue to map out which neurons contribute most to bias and further streamline these methods. For anyone keen to delve deeper, exploring the GitHub repository and testing the Fair-Llama-3.2-1B model on Hugging Face is a great next step.