Artificial intelligence is on the brink of transforming our world, but two things have slowed its progress: the energy it demands and the bottlenecks in moving data. Enter the team at Columbia Engineering, who have built a 3D photonic-electronic platform that sets new marks for energy efficiency and bandwidth and could reshape AI hardware as we know it.

Led by Keren Bergman, a professor of electrical engineering, the research was published in Nature Photonics. By combining photonics with advanced CMOS electronics, the team tackles the persistent challenge of data movement head-on. That matters because data movement is crucial to making AI technologies faster and more efficient.

As Bergman puts it, ‘We’ve developed a technology that can transfer massive amounts of data with incredibly low energy consumption.’ The work targets the energy barrier that has long limited data movement in traditional computing and AI systems.

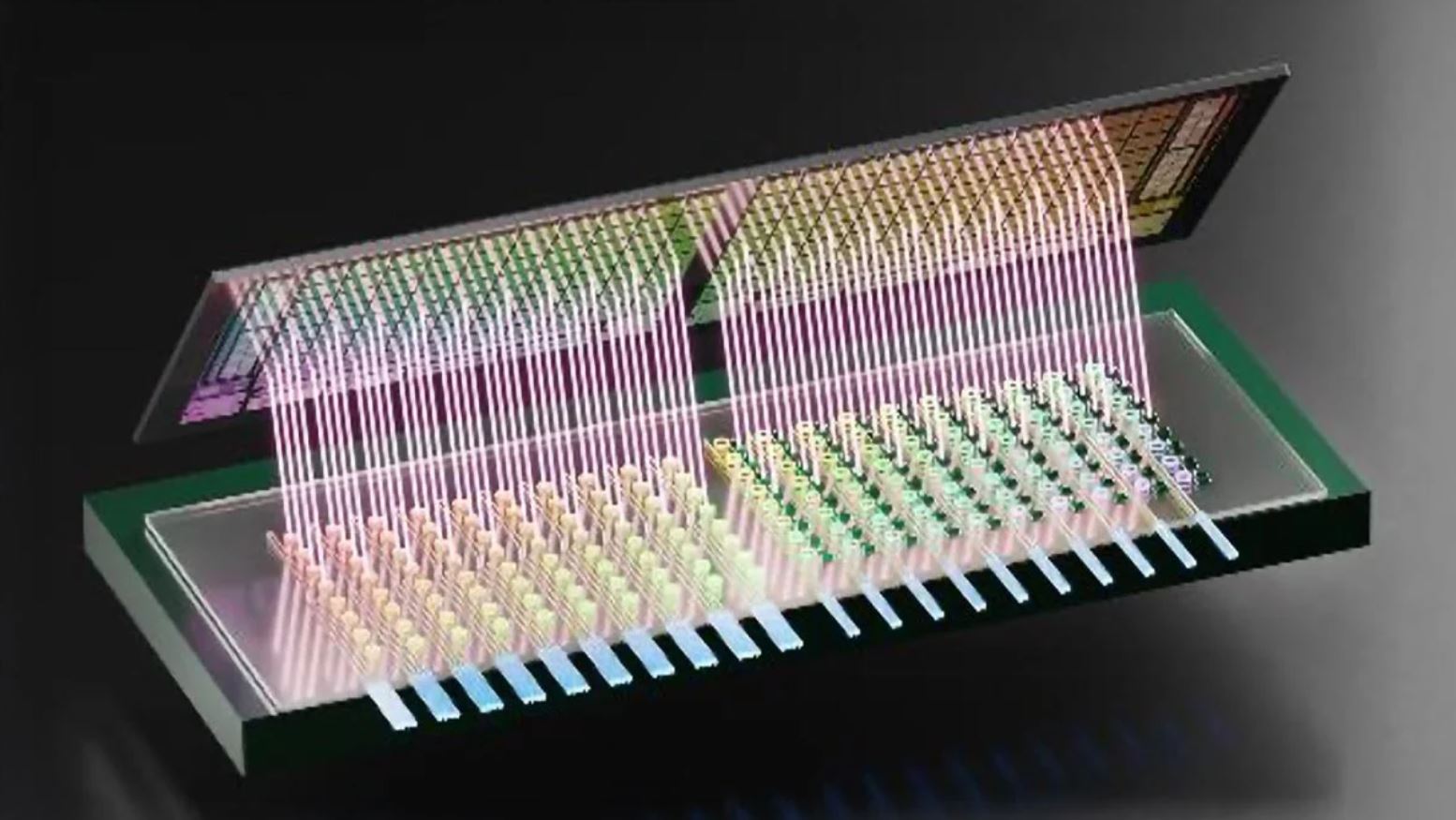

Working with Alyosha Christopher Molnar of Cornell University, the team built a 3D-integrated photonic-electronic chip that packs 80 photonic transmitters and receivers into a small footprint. It delivers 800 Gb/s of bandwidth while using just 120 femtojoules per bit, and its bandwidth density of 5.3 Tb/s/mm² sets a new benchmark in the field.
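
To put those headline numbers in perspective, here is a rough back-of-the-envelope sketch in Python. It uses only the four figures quoted above. The function name `derived_figures` is made up for illustration, and the calculation rests on two assumptions the article does not confirm: that the 800 Gb/s is shared evenly across 80 channels, and that the 120 fJ/bit figure applies to the full aggregate rate.

```python
# Figures quoted in the article.
CHANNELS = 80                 # photonic transmitters and receivers
AGGREGATE_GBPS = 800.0        # total bandwidth, Gb/s
ENERGY_FJ_PER_BIT = 120.0     # energy per transferred bit, femtojoules
DENSITY_TBPS_PER_MM2 = 5.3    # bandwidth density, Tb/s per mm^2


def derived_figures(channels, aggregate_gbps, energy_fj_per_bit, density_tbps_per_mm2):
    """Return (per-channel Gb/s, link power in mW, implied area in mm^2).

    Assumption (not from the article): bandwidth is split evenly across
    channels and the energy-per-bit figure holds at the full aggregate rate.
    """
    per_channel_gbps = aggregate_gbps / channels

    # Power = (bits per second) * (joules per bit), converted to milliwatts.
    bits_per_second = aggregate_gbps * 1e9
    joules_per_bit = energy_fj_per_bit * 1e-15
    link_power_mw = bits_per_second * joules_per_bit * 1e3

    # Area implied by dividing aggregate bandwidth (in Tb/s) by the density.
    implied_area_mm2 = (aggregate_gbps / 1e3) / density_tbps_per_mm2

    return per_channel_gbps, link_power_mw, implied_area_mm2


if __name__ == "__main__":
    rate, power, area = derived_figures(
        CHANNELS, AGGREGATE_GBPS, ENERGY_FJ_PER_BIT, DENSITY_TBPS_PER_MM2
    )
    print(f"Per-channel rate: {rate:.1f} Gb/s")
    print(f"Link power at full rate: {power:.1f} mW")
    print(f"Implied footprint: {area:.3f} mm^2")
```

Under those assumptions, each channel carries about 10 Gb/s, the whole link draws roughly 96 mW at full rate, and the quoted density implies a footprint of about 0.15 mm². These are derived estimates, not numbers reported by the researchers.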

What’s particularly exciting is that this chip is designed to be affordable. By integrating photonic devices with CMOS electronic circuits using components available in commercial foundries, it’s poised for widespread industry adoption.

This research changes how data can be transmitted between compute nodes. By integrating photonic and electronic chips in 3D, the technology achieves large energy savings and high bandwidth density, easing the traditional constraints of data locality. AI systems could then move large volumes of data efficiently, supporting distributed architectures that were previously held back by energy and latency issues.

The potential here is huge. This technology could unlock unprecedented performance levels, becoming a cornerstone for future computing systems. It’s not just about AI models; it could revolutionize real-time data processing in autonomous systems, high-performance computing, telecommunications, and even disaggregated memory systems. We’re talking about a whole new era of energy-efficient, high-speed computing infrastructure.

This research was a collaborative effort, with contributions from Cornell University, the Air Force Research Laboratory, and Dartmouth College. It’s a testament to what can be achieved when brilliant minds come together to solve complex problems.