Artificial intelligence (AI) is reshaping the world as we know it, offering transformative possibilities in fields like healthcare and customer service. But as we push the boundaries of what’s possible, it’s vital to have thoughtful policies in place to balance the incredible benefits with the risks that come along for the ride.

One major issue we can’t ignore is algorithmic bias. It happens when AI systems, often powered by machine learning, deliver skewed results because they’re trained on incomplete or unrepresentative data. This isn’t just a tech problem; it can actually worsen inequality and leave entire communities on the sidelines.

At the University of Cambridge, along with our colleagues at Warwick Business School, we’ve come up with what we call a ‘relational risk perspective’ to address these challenges. This approach doesn’t just look at how AI is used today but also anticipates future applications across different parts of the world. Our goal is to safeguard AI’s benefits for everyone while minimizing potential harm.

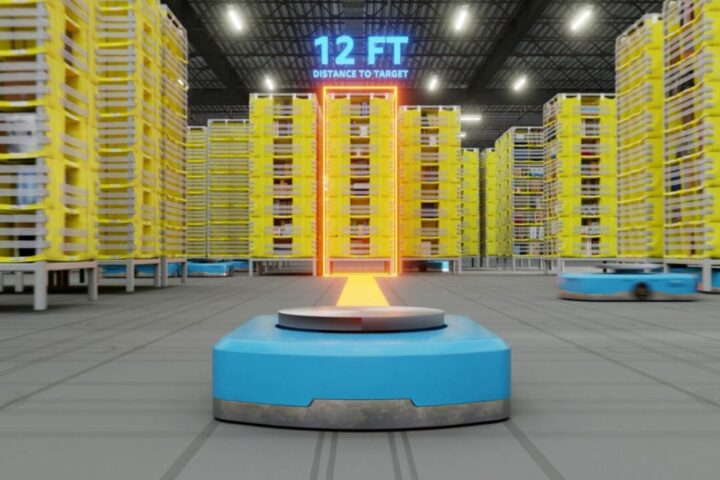

Let’s talk about the workplace. AI is already making waves, changing how both routine and creative tasks are done. Companies are leaning more on AI, which raises a real concern: the potential erosion of human expertise and critical thinking. If people start relying too much on machine-made decisions, it could alter not just how tasks are completed but also how people interact and work together.

Take recruitment, for example. If the data sets AI relies on aren’t diverse enough, we risk perpetuating inequalities in hiring and promotions. Plus, there’s an often overlooked aspect of the AI industry: it relies heavily on ‘invisible’ labor from the Global South to clean up data and fine-tune algorithms for users primarily in the Global North. This ‘data colonialism’ highlights global inequalities: the workers who do this labor see little of the benefit their efforts create.

Healthcare data is another area where bias can creep in. It’s crucial to ensure that the health data AI analyzes is representative of diverse populations. Otherwise, we risk creating policies that only widen existing disparities.

With AI technology becoming a bigger part of our lives, we need to act quickly to harness its potential responsibly. Generative AI is developing at such a pace that ethical and regulatory frameworks are struggling to keep up.

Our relational risk perspective sees AI as neither inherently good nor bad. Whether it helps or harms depends on how it’s developed and used in different social contexts. As technology evolves, so do the risks, shaped by how people interact with it and the societal structures in place. Policymakers and technologists need to proactively consider how AI might reinforce or challenge existing inequalities, keeping in mind that AI’s maturity level can vary around the globe.

To create inclusive and effective AI risk policies, we need a multidisciplinary approach that brings together diverse stakeholders. Involving them also shows the public that AI governance is taking everyone’s interests into account.