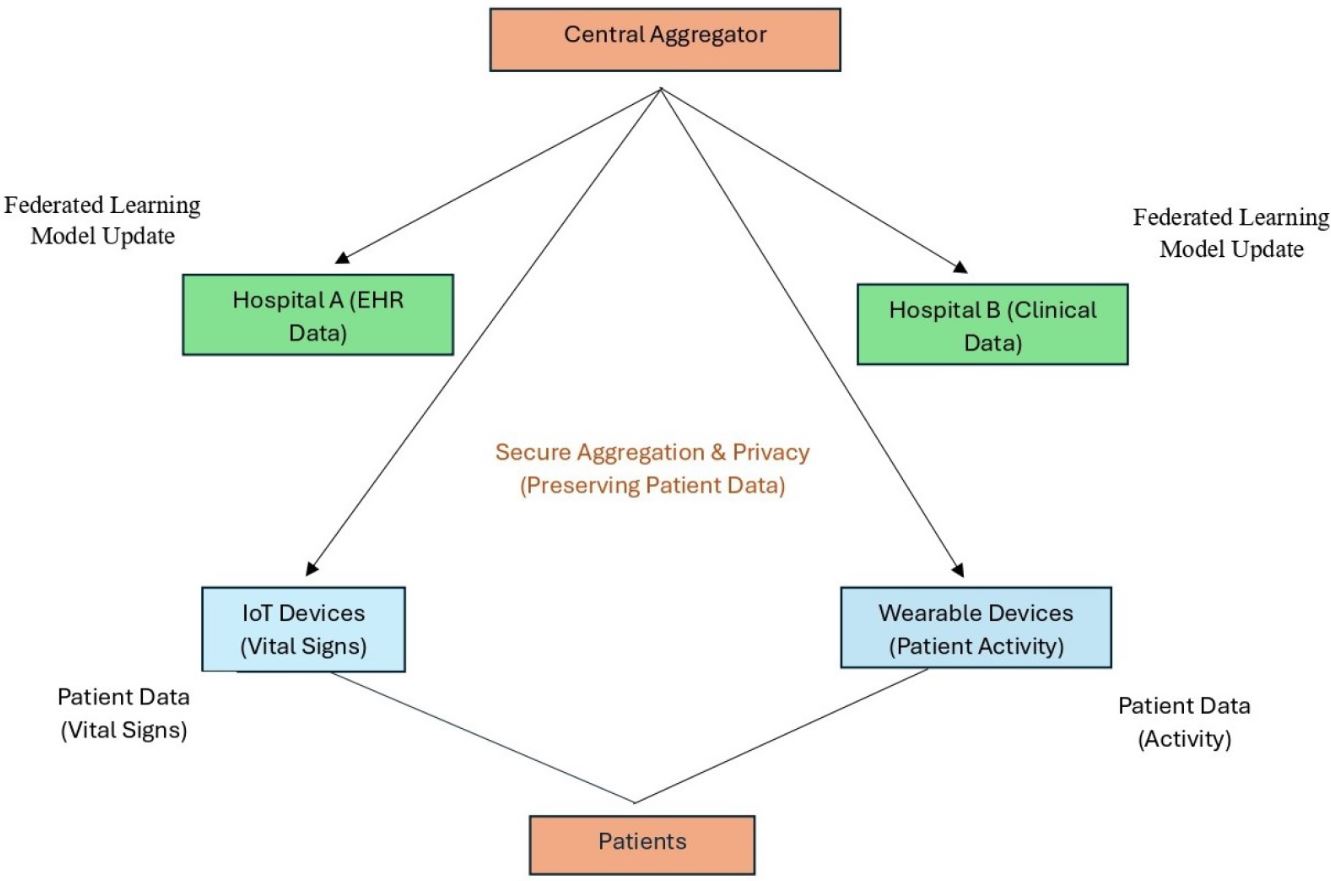

In today’s fast-paced world of healthcare innovation, federated learning is making waves. But with these advancements comes the crucial task of protecting the algorithms driving them. As biotech companies push the boundaries of AI and machine learning, they face the dual challenge of safeguarding patient data and securing their proprietary algorithms. This is especially true for tasks like brain lesion segmentation, which must run directly at healthcare facilities on patient data without compromising security.

Federated learning offers a way forward by ensuring data remains on-site and is processed securely. However, when these algorithms are considered corporate assets, the need for robust protection becomes even more pressing. Let’s dive into some strategies to shield these algorithms from intellectual property theft and unauthorized access, particularly within hospitals.
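The idea that "data stays on-site" can be made concrete with a minimal sketch of federated averaging. This sketch assumes a FedAvg-style scheme on a toy one-parameter linear model. The article does not specify an aggregation method, and the datasets, learning rate and round counts are hypothetical. Only the fitted weight leaves each site. Note that the training logic (`local_update`) has to run at every hospital, which is exactly why the algorithm needs protection there.

```python
# Minimal FedAvg-style sketch (illustrative assumption, not the article's method).
# Toy model: y = w * x. Raw (x, y) pairs never leave a site; only w does.
from typing import List, Tuple

Dataset = List[Tuple[float, float]]


def local_update(w: float, data: Dataset, lr: float = 0.01, epochs: int = 50) -> float:
    """Plain gradient descent on mean squared error, run entirely on-site."""
    for _ in range(epochs):
        grad = sum(2 * (w * x - y) * x for x, y in data) / len(data)
        w -= lr * grad
    return w


def federated_round(w_global: float, sites: List[Dataset]) -> float:
    """Each site trains locally from the global weight; the coordinator
    averages the returned weights, weighted by each site's sample count."""
    updates = [(local_update(w_global, d), len(d)) for d in sites]
    total = sum(n for _, n in updates)
    return sum(w * n for w, n in updates) / total


if __name__ == "__main__":
    # Hypothetical data at two hospitals, both roughly following y = 3x.
    hospital_a = [(1.0, 3.1), (2.0, 5.9), (3.0, 9.2)]
    hospital_b = [(1.5, 4.4), (2.5, 7.6)]
    w = 0.0
    for _ in range(20):
        w = federated_round(w, [hospital_a, hospital_b])
    print(f"global weight after 20 rounds: {w:.3f}")
```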

Securing Algorithm Code

Algorithms are often deployed in Docker-compatible containers, each running independently with its own set of libraries. The real challenge is securing these environments against third-party IT administrators who have privileged access to the host and might exploit it. A multi-layered approach is essential for effective protection.

First, the algorithm code itself must be protected against unauthorized access and reverse engineering. Second, the runtime environment must be evaluated to reduce the risk of administrators reading confidential data inside running containers. Third, infrastructure safeguards are needed to prevent unauthorized administrator access in the first place.

Exploring Confidential Containers (CoCo)

Confidential Containers (CoCo) technology is emerging as a promising solution, offering confidential runtime environments that protect both the algorithm code and the data. CoCo supports multiple Trusted Execution Environments (TEEs), relying on hardware-backed isolation to protect workloads during execution. However, CoCo still has security gaps that skilled administrators might exploit.
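Confidential-computing stacks such as CoCo typically rely on remote attestation: a secret, such as the key that decrypts the algorithm, is released only after the TEE proves which workload it is running. The following is a toy model of that idea, not CoCo's actual API. `ToyKeyBroker`, `measure`, `make_quote` and `HARDWARE_KEY` are all hypothetical, and the hardware-signed quote of a real TEE is imitated with an HMAC purely so the sketch runs with the standard library.

```python
# Toy model of attestation-gated key release (all names are hypothetical).
import hashlib
import hmac
import secrets
from typing import Optional, Set

# HYPOTHETICAL stand-in for the hardware root of trust. In a real TEE the
# signing key never leaves the CPU and evidence is verified against
# vendor-issued certificates; an HMAC is used here only to keep it runnable.
HARDWARE_KEY = secrets.token_bytes(32)


def measure(workload: bytes) -> str:
    """Hash of the workload (e.g. container image) the TEE is asked to launch."""
    return hashlib.sha256(workload).hexdigest()


def make_quote(measurement: str) -> bytes:
    """Simulated attestation evidence produced by the TEE hardware."""
    return hmac.new(HARDWARE_KEY, measurement.encode(), hashlib.sha256).digest()


class ToyKeyBroker:
    """Releases the algorithm's decryption key only to genuine, approved workloads."""

    def __init__(self, allowed: Set[str], algorithm_key: bytes) -> None:
        self.allowed = allowed
        self.algorithm_key = algorithm_key

    def request_key(self, measurement: str, quote: bytes) -> Optional[bytes]:
        if not hmac.compare_digest(make_quote(measurement), quote):
            return None  # evidence was not produced by the (simulated) hardware
        if measurement not in self.allowed:
            return None  # workload differs from the approved image
        return self.algorithm_key


approved = b"lesion-segmentation:v1"
broker = ToyKeyBroker({measure(approved)}, secrets.token_bytes(32))

m = measure(approved)
print(broker.request_key(m, make_quote(m)) is not None)  # True

tampered = measure(b"lesion-segmentation:v1 + debug shell")
print(broker.request_key(tampered, make_quote(tampered)) is not None)  # False
```

An administrator who modifies the workload changes its measurement and is refused the key. The residual gaps mentioned above lie outside what this toy model captures.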

Other Protection Strategies

Host-based container image encryption can protect algorithm code at rest and in transit, but it doesn’t secure the algorithm during runtime: the image is decrypted on the host before the container starts, so administrators with access to the keys can recover the plaintext code.

Prebaked custom virtual machines offer another option: encrypted VM images that require a key to be supplied externally at boot time, which strengthens algorithm protection. Yet this method also has vulnerabilities, especially to hypervisor-level attacks after boot.

Distroless container images reduce the attack surface but don’t protect the algorithm code. Meanwhile, transitioning algorithms from interpreted languages like Python to compiled languages such as C++ or Rust can make reverse engineering significantly harder, though it introduces development complexity.
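To illustrate why interpreted Python is easy to reverse engineer, the sketch below compiles a hypothetical "proprietary" function, serializes it with `marshal` (a `.pyc` file is a short header followed by a marshalled code object), and then recovers its name, its secret constant and its full instruction listing using only the standard library. The function and threshold are invented for illustration.

```python
# Shows how much logic survives in Python bytecode (illustrative example).
import dis
import marshal
import types

# HYPOTHETICAL "proprietary" post-processing step with a secret threshold.
SOURCE = """
def postprocess(probability):
    return probability > 0.4375
"""

module_code = compile(SOURCE, "<proprietary>", "exec")
# Roughly what ships inside a .pyc file, minus the header.
shipped_bytes = marshal.dumps(module_code)

# Anyone who can read the container's files needs only the standard library:
recovered = marshal.loads(shipped_bytes)
func_code = next(c for c in recovered.co_consts if isinstance(c, types.CodeType))

print(func_code.co_name)              # postprocess
print(0.4375 in func_code.co_consts)  # True: the threshold is in plain sight
dis.dis(func_code)                    # instruction listing of the function body
```

Compiling to C++ or Rust removes this direct mapping from shipped artifact to source-level logic. Machine code can still be disassembled, which is consistent with the point that compilation makes reverse engineering harder rather than impossible.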

Code obfuscation can make reverse engineering more challenging, but it’s not foolproof given advances in de-obfuscation tools. While homomorphic encryption shows promise for data protection, it doesn’t shield the algorithm itself.
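A toy Paillier example shows why homomorphic encryption protects data but not the algorithm. Paillier is an additively homomorphic scheme, chosen here only for illustration because the text does not name a specific scheme. The tiny primes make it completely insecure. The party evaluating a hypothetical linear scoring model never sees the plaintext inputs, but it must hold the model weights in plaintext to compute on the ciphertexts.

```python
# Toy Paillier cryptosystem with tiny, INSECURE parameters (illustration only).
import math
import secrets

# Hypothetical toy primes; real deployments use primes of 1024 bits or more.
P, Q = 293, 433
N = P * Q
N2 = N * N
G = N + 1                     # common simple choice of generator
LAM = math.lcm(P - 1, Q - 1)  # Carmichael function of N (Python 3.9+)
MU = pow(LAM, -1, N)          # valid shortcut because G = N + 1 (Python 3.8+)


def encrypt(m: int) -> int:
    """Encrypt 0 <= m < N with fresh randomness r coprime to N."""
    while True:
        r = secrets.randbelow(N - 1) + 1
        if math.gcd(r, N) == 1:
            break
    return (pow(G, m, N2) * pow(r, N, N2)) % N2


def decrypt(c: int) -> int:
    l_value = (pow(c, LAM, N2) - 1) // N
    return (l_value * MU) % N


def encrypted_weighted_sum(ciphertexts, weights):
    """Computes Enc(sum(w_i * x_i)) without seeing any x_i.
    The weights (the 'algorithm') must be available here in plaintext."""
    acc = 1  # a valid encryption of 0 (r = 1)
    for c, w in zip(ciphertexts, weights):
        acc = (acc * pow(c, w, N2)) % N2
    return acc


# Data owner: encrypt hypothetical feature values.
ciphertexts = [encrypt(x) for x in [12, 7, 30]]
# Model side: proprietary weights, visible to whoever runs this step.
result = encrypted_weighted_sum(ciphertexts, [3, 5, 2])
print(decrypt(result))  # 131
```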

A Combined Approach

Ultimately, no single solution offers complete protection against determined adversaries. A combination of strategies, including hardware isolation and legal frameworks, creates substantial barriers to intellectual property theft. The evolution of AI in healthcare depends on these multi-faceted security measures.

Hardware and software vendors continue to invest significant effort in addressing these challenges. With ongoing advancements, training IP-protected algorithms in federated settings without compromising patient data privacy is on the horizon. However, these solutions must adapt to the diverse infrastructure of healthcare settings to be truly effective.

The community’s feedback and collaboration are vital in developing sustainable, secure methodologies for AI deployment, ensuring robust security and compliance. Together, we can tackle these challenges, paving the way for groundbreaking progress in healthcare technologies.