Imagine your computer not just processing data, but remembering and adapting like a trusted friend. Titans is a fresh take on AI architecture that transforms how large language models manage memory, offering a more natural and responsive approach.

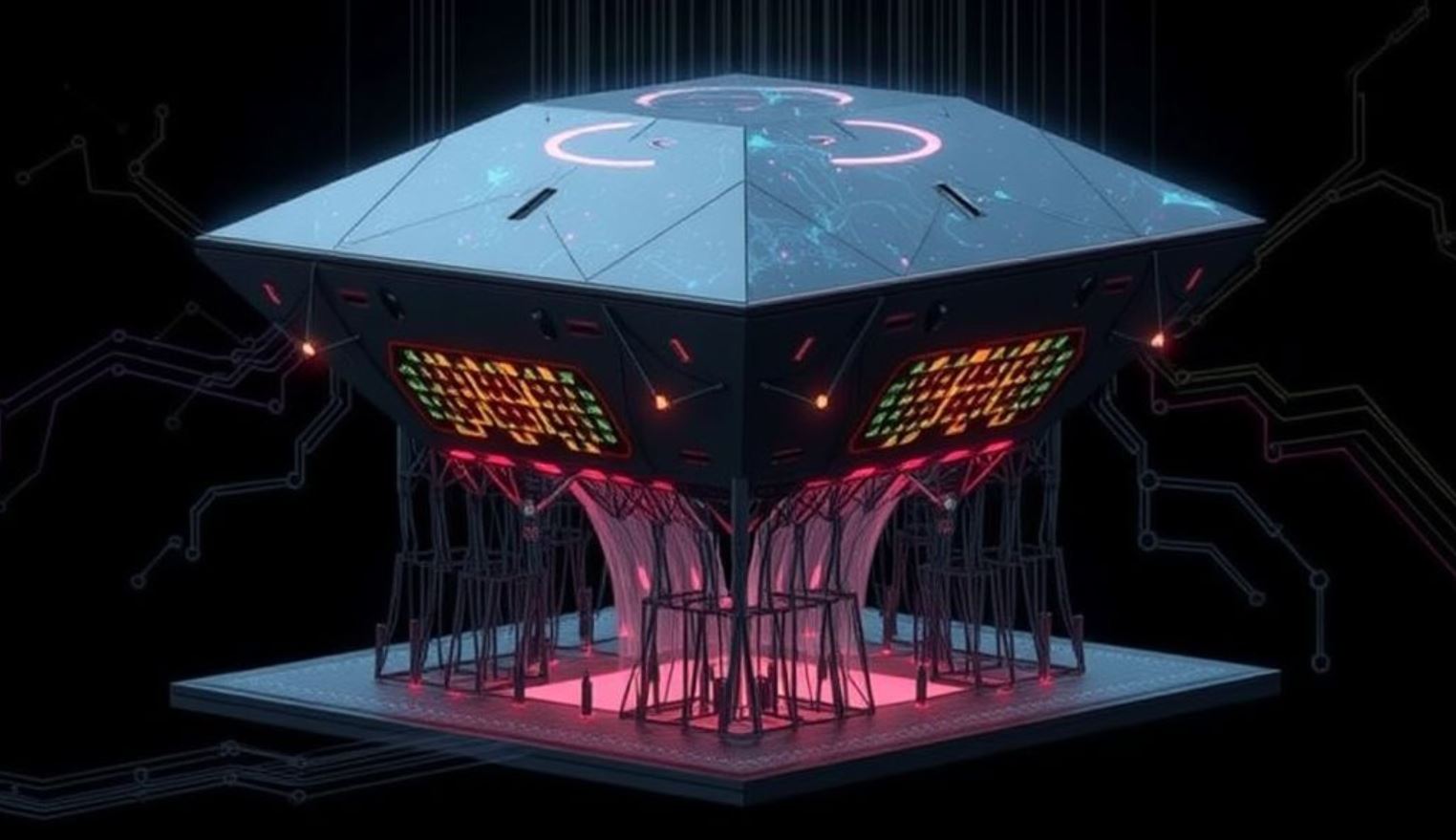

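Traditional models such as transformers excel at understanding language but struggle to recall details from lengthy conversations or big datasets. Part of the reason is that the cost of attention grows quadratically with the length of the context, which caps how much text they can take in at once. Titans closes this gap with a human-inspired memory system built from three key components: Short-Term Memory (STM), which uses attention over a limited window to capture immediate details; a Long-Term Memory Module (LMM), a neural memory that keeps learning at test time by storing what it finds surprising and gradually forgetting what it no longer needs; and Persistent Memory (PM), a set of learnable, input-independent parameters that encode knowledge about the task. Together, these let the model draw on both recent details and information from much earlier in the input.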
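To make "learns as it goes" concrete, here is a minimal Python sketch of a long-term memory that updates itself while it reads. It loosely follows the update rule described in the Titans paper: the memory takes a gradient step on an associative loss ‖M k − v‖², treats that gradient as "surprise", carries past surprise forward with momentum, and decays old content with a forgetting factor. The assumptions are deliberate simplifications: the memory is a single linear matrix rather than a deeper network, keys and values are passed in directly instead of being learned projections, the rates are fixed scalars rather than data-dependent, and the names `LinearNeuralMemory`, `update`, `retrieve` and `loss` are hypothetical.

```python
from typing import List

Matrix = List[List[float]]
Vector = List[float]


def matvec(m: Matrix, x: Vector) -> Vector:
    """Multiply matrix m by vector x."""
    return [sum(mij * xj for mij, xj in zip(row, x)) for row in m]


class LinearNeuralMemory:
    """Toy long-term memory: a dim x dim matrix M that is updated at test time.

    Assumptions: linear memory, keys/values supplied directly (no learned
    projections), and fixed scalar rates instead of data-dependent ones.
    """

    def __init__(self, dim: int, lr: float = 0.1, momentum: float = 0.5,
                 forget: float = 0.01) -> None:
        self.m: Matrix = [[0.0] * dim for _ in range(dim)]  # memory weights
        self.s: Matrix = [[0.0] * dim for _ in range(dim)]  # accumulated surprise
        self.lr = lr              # step size on the momentary surprise (gradient)
        self.momentum = momentum  # how much past surprise carries over
        self.forget = forget      # fraction of old memory decayed each step

    def retrieve(self, query: Vector) -> Vector:
        """Read from memory without changing it."""
        return matvec(self.m, query)

    def loss(self, key: Vector, value: Vector) -> float:
        """Associative loss ||M k - v||^2."""
        return sum((p - v) ** 2 for p, v in zip(matvec(self.m, key), value))

    def update(self, key: Vector, value: Vector) -> float:
        """One test-time learning step; returns the loss before the step."""
        # Error is computed once, from the memory as it was before this step.
        err = [p - v for p, v in zip(matvec(self.m, key), value)]
        loss_before = sum(e * e for e in err)
        for i, row in enumerate(self.m):
            for j in range(len(row)):
                grad = 2.0 * err[i] * key[j]  # d/dM[i][j] of ||M k - v||^2
                # Surprise: decayed past surprise minus the scaled gradient.
                self.s[i][j] = self.momentum * self.s[i][j] - self.lr * grad
                # Forgetting: shrink the old memory, then add the surprise.
                row[j] = (1.0 - self.forget) * row[j] + self.s[i][j]
        return loss_before


if __name__ == "__main__":
    mem = LinearNeuralMemory(dim=2)
    key, value = [1.0, 0.0], [0.0, 1.0]
    first = mem.update(key, value)  # brand-new pair: loss 1.0, i.e. high surprise
    for _ in range(200):
        mem.update(key, value)
    print(first, mem.loss(key, value), mem.retrieve(key))
```

The first update reports a large loss because the pair is new; after repeated exposure the loss shrinks toward a small residual left by the forgetting term. Pure Python keeps the sketch dependency-free; a practical version would use tensors and automatic differentiation.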

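The design is also flexible. Titans comes in three variants that differ in how the long-term memory is combined with attention: Memory as a Context (MAC) retrieves information from memory and adds it to the attention window as extra context; Memory as a Gate (MAG) runs memory and sliding-window attention side by side and blends their outputs through a gate; and Memory as a Layer (MAL) places the memory as a layer that processes the input before attention. These modes let the system either extend its context for better understanding or mix information dynamically, depending on the task at hand.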
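The three variants differ mainly in where the memory's output enters the computation. The Python sketch below wires toy components together to show those placements side by side. It is schematic, not the paper's implementation: attention has no learned projections, the memory is any vector-to-vector function (a `tanh` stand-in rather than a trained long-term memory), the MAG gate is a simple sigmoid of the input chosen purely for illustration, and the full MAC design includes further steps (such as updating the memory from the attention output) that are left out here. All function names are hypothetical.

```python
import math
from typing import Callable, List

Vector = List[float]
Memory = Callable[[Vector], Vector]


def dot(a: Vector, b: Vector) -> float:
    return sum(x * y for x, y in zip(a, b))


def sliding_window_attention(seq: List[Vector], window: int) -> List[Vector]:
    """Causal softmax attention: position t attends to at most `window` items ending at t.

    Queries, keys and values are the raw inputs (no learned projections).
    """
    out = []
    for t, q in enumerate(seq):
        ctx = seq[max(0, t - window + 1): t + 1]
        scores = [dot(q, k) / math.sqrt(len(q)) for k in ctx]
        top = max(scores)  # subtract the max for numerical stability
        weights = [math.exp(s - top) for s in scores]
        total = sum(weights)
        out.append([sum(w * v[i] for w, v in zip(weights, ctx)) / total
                    for i in range(len(q))])
    return out


def mac(segment: List[Vector], persistent: List[Vector], memory: Memory) -> List[Vector]:
    """Memory as a Context: retrieved memories become extra tokens that attention can read."""
    retrieved = [memory(x) for x in segment]
    context = persistent + retrieved + segment
    y = sliding_window_attention(context, window=len(context))  # causal attention over all of it
    return y[-len(segment):]  # keep the outputs at the segment's own positions


def mag(x: List[Vector], persistent: List[Vector], memory: Memory, window: int) -> List[Vector]:
    """Memory as a Gate: attention and memory run side by side, and a gate blends them."""
    attn = sliding_window_attention(persistent + x, window)[-len(x):]
    out = []
    for xt, a in zip(x, attn):
        m = memory(xt)
        gate = [1.0 / (1.0 + math.exp(-v)) for v in xt]  # hypothetical sigmoid gate from the input
        out.append([g * ai + (1.0 - g) * mi for g, ai, mi in zip(gate, a, m)])
    return out


def mal(x: List[Vector], persistent: List[Vector], memory: Memory, window: int) -> List[Vector]:
    """Memory as a Layer: the memory transforms the sequence, then attention runs on the result."""
    y = [memory(v) for v in persistent + x]
    return sliding_window_attention(y, window)[-len(x):]


def toy_memory(v: Vector) -> Vector:
    """Stand-in for a trained long-term memory."""
    return [math.tanh(a) for a in v]


if __name__ == "__main__":
    persistent = [[0.1, -0.1]]  # one hypothetical persistent-memory token
    x = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
    print("MAC", mac(x, persistent, toy_memory))
    print("MAG", mag(x, persistent, toy_memory, window=2))
    print("MAL", mal(x, persistent, toy_memory, window=2))
```

Passing the memory in as a plain function keeps the wiring of each variant visible; in a real model the memory would itself be updated as tokens stream in.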

This approach improves the model’s ability to handle complex reasoning and tasks that depend on deep context, and it is reported to outperform existing models such as GPT‑4 and Llama 3.1 on benchmarks like BABILong. Beyond language, Titans also shows promise in other fields, such as time series forecasting and DNA modelling.

If you’ve ever wrestled with an AI that just couldn’t keep up with a long conversation, you’ll appreciate how Titans brings a human touch to machine memory. By combining immediate recall with knowledge that keeps evolving, it offers more reliable and adaptable performance: a step towards AI that truly understands context.