Hey there! If you’ve been working with graph data, you know how crucial Graph Convolutional Networks (GCNs) and Graph Attention Networks (GATs) have been. But let’s face it, they can hit a wall when it comes to speed and handling large, ever-changing graphs. That’s where GraphSAGE steps in as a game-changer.

Traditional GCNs and GATs have their strengths, but they often struggle with scaling and adapting to new structures. As they’re usually trained, on the whole graph at once, they’re not the best at inductive learning, which is all about generalizing to unseen nodes or graphs. Plus, when you’re dealing with massive graphs, the computational load can be overwhelming. A GCN aggregates over every neighbor at every layer, so the number of nodes feeding into a single prediction can grow exponentially with depth, and GATs add the cost of computing multi-head attention scores on every edge.
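To get a feel for that growth, here’s a quick back-of-the-envelope sketch. The helper `receptive_field_bound` and the numbers (about 50 neighbors per node, three layers, sample sizes of 10, 5, and 5) are made up for illustration; they aren’t taken from any benchmark.

```python
def receptive_field_bound(fanout_per_layer):
    """Upper bound on the nodes touched to compute one node's output.

    fanout_per_layer[i] is the most neighbors expanded per node at hop i + 1:
    roughly the average degree for full aggregation, or the sample size
    when neighbors are sampled.
    """
    total, frontier = 1, 1  # start with the target node itself
    for fanout in fanout_per_layer:
        frontier *= fanout   # nodes newly reached at this hop (worst case)
        total += frontier
    return total


# Hypothetical graph: ~50 neighbors per node, three layers deep.
full = receptive_field_bound([50, 50, 50])
# Same depth, but capped at 10, 5, and 5 sampled neighbors per hop.
sampled = receptive_field_bound([10, 5, 5])
print(full, sampled)  # 127551 311
```

The capped version still grows with depth, just from a much smaller base, which is why the sample sizes are a knob you get to tune.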

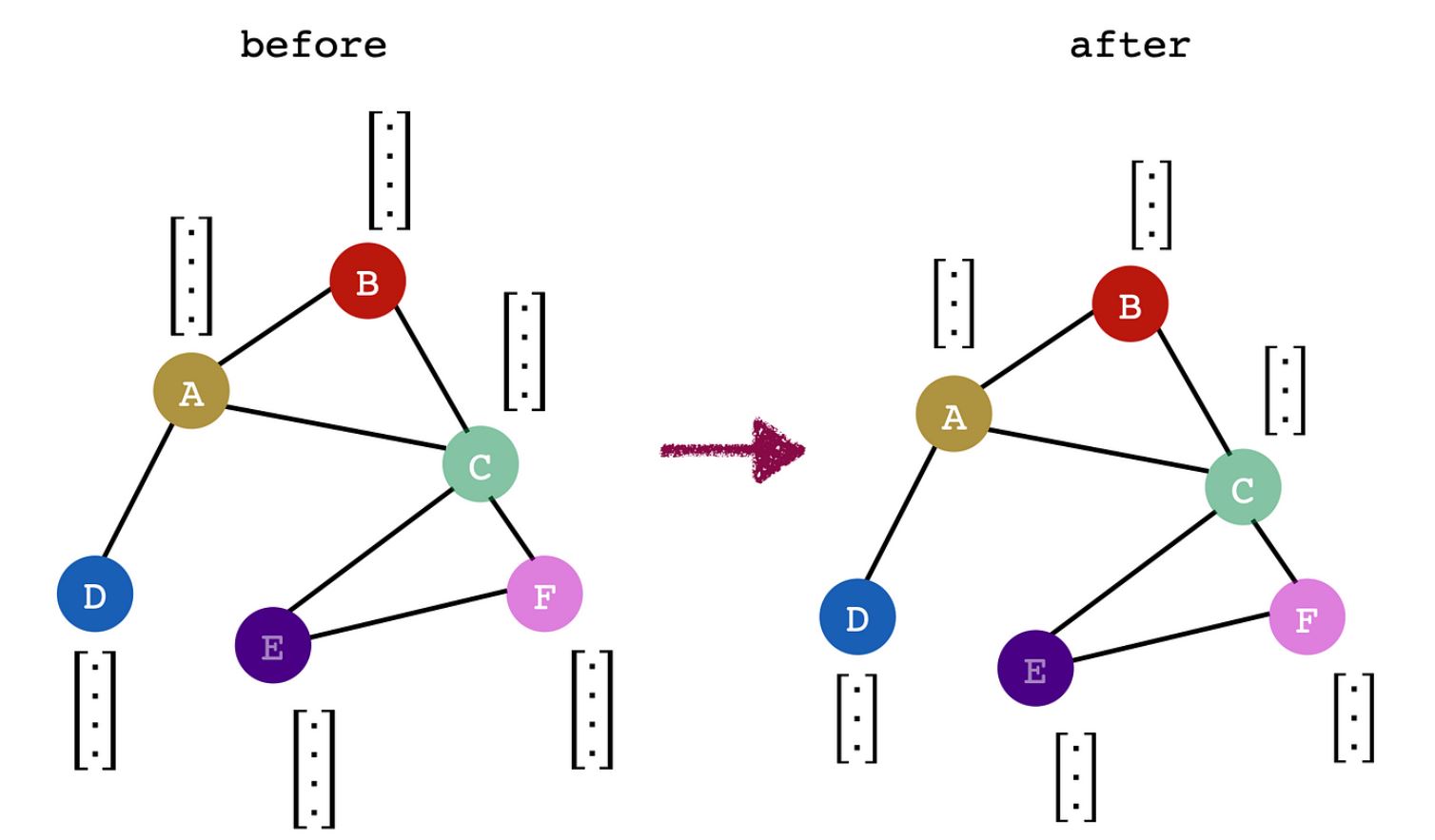

Enter GraphSAGE, short for SAmple and aggreGatE. It’s designed to handle these challenges by sampling a subset of neighbors instead of processing them all. This makes it much more feasible to work with large graphs. It uses different aggregation methods like mean, LSTM, and pooling to effectively recognize patterns and generalize.

Concretely, GraphSAGE samples a fixed-size set of neighbors for each node, so the cost per node stays bounded no matter how many connections a hub has. It then combines the sampled neighbors’ features with an aggregator such as mean, LSTM, or pooling. This lets it capture local structure without any single high-degree neighborhood dominating the computation.
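Here’s a minimal, plain-Python sketch of the sample-then-aggregate step with a mean aggregator. The function names, the toy graph, and the fallback rules (sampling with replacement when a node has too few neighbors, and falling back to the node itself when it has none) are assumptions for illustration, not the only way to do it.

```python
import random


def sample_neighbors(adj, node, k, rng):
    """Return exactly k neighbor ids for `node`.

    Assumption: with fewer than k neighbors we sample with replacement so
    every node yields a fixed-size list; isolated nodes use themselves.
    """
    neighbors = adj.get(node, [])
    if not neighbors:
        return [node] * k
    if len(neighbors) >= k:
        return rng.sample(neighbors, k)  # without replacement
    return [rng.choice(neighbors) for _ in range(k)]


def mean_aggregate(features, neighbor_ids):
    """Element-wise mean of the sampled neighbors' feature vectors."""
    dim = len(features[neighbor_ids[0]])
    return [
        sum(features[n][d] for n in neighbor_ids) / len(neighbor_ids)
        for d in range(dim)
    ]


# Toy star graph: node 0 is the hub, nodes 1-4 are leaves.
adj = {0: [1, 2, 3, 4], 1: [0], 2: [0], 3: [0], 4: [0]}
features = {
    0: [1.0, 0.0], 1: [0.0, 2.0], 2: [2.0, 2.0], 3: [4.0, 0.0], 4: [2.0, 4.0],
}

rng = random.Random(0)  # seeded so runs are repeatable
sampled = sample_neighbors(adj, 0, 2, rng)
print(sampled, mean_aggregate(features, sampled))
```

Because the sample is random, the hub’s aggregated vector changes from draw to draw; during training that randomness is re-drawn for every batch.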

One of GraphSAGE’s key strengths is that it combines these aggregated features with a node’s own features, typically by concatenating the two before applying a learned transformation. This means it maintains the node’s identity while still learning from its neighbors. Each layer pulls in information from one hop further out, so stacking k layers lets a node see nodes up to k hops away.
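The update described above can be sketched in a few lines of plain Python. This is a simplified mean-aggregator layer (concatenate, multiply by a weight matrix, apply ReLU); the name `sage_layer`, the hand-picked weights, and the three-node path graph are hypothetical, and extras such as a bias term or output normalization are left out.

```python
def sage_layer(h, neighbors, weight):
    """One mean-aggregator update for every node.

    h:         dict node -> feature list of length d
    neighbors: dict node -> list of (already sampled) neighbor ids
    weight:    list of output rows, each of length 2 * d
    """
    out = {}
    for v, h_v in h.items():
        nbrs = neighbors[v] or [v]  # isolated node: fall back to itself
        agg = [sum(h[u][i] for u in nbrs) / len(nbrs) for i in range(len(h_v))]
        combined = h_v + agg  # concatenation keeps the node's own features
        out[v] = [
            max(0.0, sum(w * x for w, x in zip(row, combined)))  # ReLU
            for row in weight
        ]
    return out


# Path graph 0 - 1 - 2; only node 0 starts with a non-zero feature.
neighbors = {0: [1], 1: [0, 2], 2: [1]}
h0 = {0: [1.0], 1: [0.0], 2: [0.0]}
W = [[1.0, 1.0]]  # hypothetical weights: output = self + neighbor mean

h1 = sage_layer(h0, neighbors, W)
h2 = sage_layer(h1, neighbors, W)
print(h1[2], h2[2])  # [0.0] [0.5]
```

Node 2 is two hops from node 0, so it sees nothing of node 0’s signal after one layer and only picks it up after the second.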

GraphSAGE is available in PyTorch Geometric (PyG), which supports mini-batch training with neighbor sampling. This is a big plus over the standard GCN and GAT setup, where every training step runs over the full graph, and it’s what makes GraphSAGE much more scalable.

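On small citation benchmarks like Cora and CiteSeer, which have only a few thousand nodes each, GraphSAGE can hold its own on accuracy against GCNs and GATs. The benefit of sampling becomes more visible on a larger graph like PubMed, with roughly 20,000 nodes, where training cost starts to matter. Exact results depend on the aggregator, the sample sizes, and the data split.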

GraphSAGE isn’t just about supporting inductive learning; it’s also about adapting to changing graph structures effectively. While GCNs are still valuable baselines, GraphSAGE’s combination of neighbor sampling and learned aggregation functions gives it a clear edge in managing large, dynamic graphs.