Scientists at Brookhaven National Laboratory are reshaping research with VISION, a voice-controlled AI assistant that answers researchers' questions about their instruments and streamlines experiments. By letting you talk to complex instruments in everyday language, VISION makes it easier to manage experiments and analyse data.

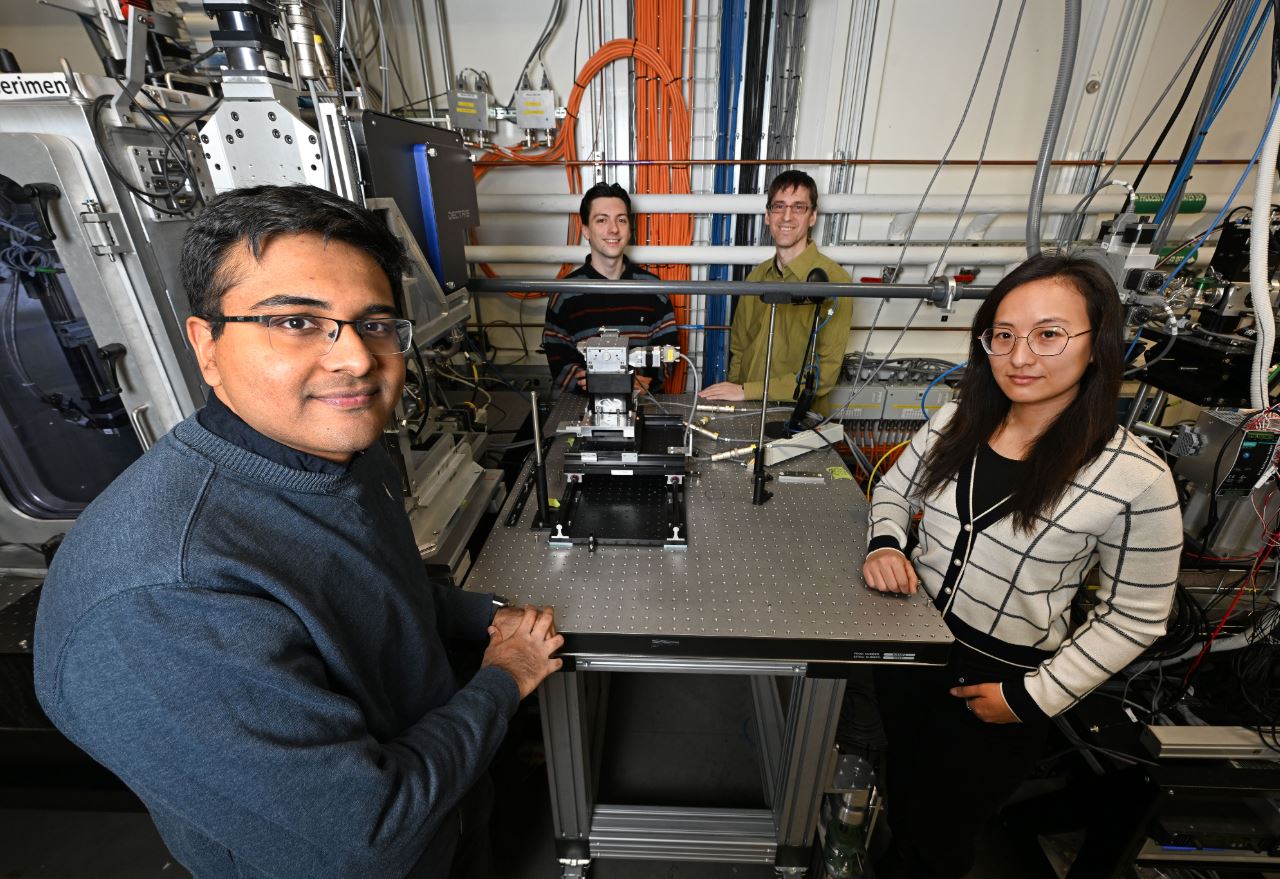

Developed at the Center for Functional Nanomaterials (CFN) with input from the National Synchrotron Light Source II (NSLS-II), the tool uses large language models (LLMs) to interpret both spoken and written commands and convert them into executable code. This means researchers can skip tedious software setup and focus on what really matters: their science.

As Esther Tsai from the AI-Accelerated Nanoscience group at CFN puts it, “I’m really excited about how AI can impact science. We spend far too much time on routine tasks, and VISION steps in to answer basic questions about instrument capability and operation.”

The system’s architecture is built around modular “cognitive blocks”, or cogs, each driven by a dedicated LLM that handles one kind of task, from instrument control to data analysis. According to Shray Mathur, who leads the audio-understanding part of the project, this design makes the system flexible and lets it integrate new AI models as they emerge.
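The idea of routing each request to a dedicated cog can be sketched in a few lines of Python. This is a minimal, hypothetical illustration, not VISION's actual code: the names `Cog`, `Assistant`, `classify`, `instrument_cog` and `analysis_cog` are invented for the example, plain functions stand in for the LLMs that drive each cog, and a simple keyword match stands in for whatever mechanism VISION really uses to decide which cog receives a request.

```python
from dataclasses import dataclass
from typing import Callable, Dict


@dataclass
class Cog:
    """A hypothetical cognitive block: a named handler for one kind of task."""
    name: str
    handle: Callable[[str], str]


def instrument_cog(request: str) -> str:
    # Stand-in for an LLM that would turn a request into instrument-control code.
    return f"# instrument code for: {request}"


def analysis_cog(request: str) -> str:
    # Stand-in for an LLM that would write data-analysis code.
    return f"# analysis code for: {request}"


def classify(request: str) -> str:
    # Assumption: keyword routing replaces the model-based routing a real system would use.
    text = request.lower()
    if any(word in text for word in ("plot", "fit", "analyse", "analyze")):
        return "analysis"
    return "instrument"


class Assistant:
    """Dispatches each request to the cog registered under the classified key."""

    def __init__(self) -> None:
        self.cogs: Dict[str, Cog] = {}

    def register(self, key: str, cog: Cog) -> None:
        # Adding or replacing one cog leaves all the others untouched.
        self.cogs[key] = cog

    def ask(self, request: str) -> str:
        return self.cogs[classify(request)].handle(request)


assistant = Assistant()
assistant.register("instrument", Cog("instrument", instrument_cog))
assistant.register("analysis", Cog("analysis", analysis_cog))
print(assistant.ask("Move the sample stage 2 mm to the left"))
print(assistant.ask("Plot the scattering intensity"))
```

Because cogs are looked up by key, swapping one for a newer model is a single `register` call that does not affect the rest of the system, which is the kind of flexibility the modular design aims for.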

The collaboration between CFN and NSLS-II underlines the project’s practical potential. Tested at Brookhaven’s CMS beamline, VISION already demonstrates how a more natural interface between researchers and sophisticated instruments can boost experimental workflows.

Looking forward, the team plans to extend VISION’s capabilities to additional beamlines, gathering feedback to continuously refine the system. In doing so, they hope to create an interconnected network of AI assistants that together help scientific discovery flourish.