If you’ve ever wrestled with an LLM that loses track of details even after being fed a massive chunk of text, you’re not alone. Recent breakthroughs mean these models can now process hundreds of pages at once, but that doesn’t necessarily translate to flawless memory.

New research suggests that as the complexity of a task grows, an LLM’s working memory can become overwhelmed long before it hits its token limit. A new theoretical model backs this up, and experiments show that models can start to miss crucial details, whether that’s spotting a plot twist in a lengthy narrative or accurately answering questions about a set of similar documents.

Consider the challenge of debugging code. When tracking several variable assignments, an LLM may be forced into random guessing once the number of variables outstrips its limited working memory, which is often somewhere between five and ten items. In situations like these, even an unlimited context window won’t save the day.
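If you want to see this effect on your own model, one option is to generate variable-tracking puzzles with a known answer. The sketch below is a hypothetical probe, not the researchers’ benchmark: `make_assignment_puzzle` and its parameters are our own names, and the exact puzzle format is an assumption.

```python
import random
from typing import Dict, List, Tuple


def make_assignment_puzzle(num_vars: int, num_steps: int,
                           seed: int = 0) -> Tuple[str, str, int]:
    """Build a chain of variable assignments plus its ground-truth answer.

    Returns (program_text, target_variable, expected_value).
    """
    if num_vars < 2:
        raise ValueError("need at least two variables to copy between")
    rng = random.Random(seed)  # seeded so the same puzzle can be regenerated
    names = [f"v{i}" for i in range(num_vars)]
    env: Dict[str, int] = {}  # ground-truth values, tracked outside the model
    lines: List[str] = []
    # Give every variable an initial literal value.
    for name in names:
        env[name] = rng.randint(0, 9)
        lines.append(f"{name} = {env[name]}")
    # Then shuffle values around with copy assignments such as `v3 = v1`.
    for _ in range(num_steps):
        dst, src = rng.sample(names, 2)
        env[dst] = env[src]
        lines.append(f"{dst} = {src}")
    target = rng.choice(names)
    return "\n".join(lines), target, env[target]
```

You would send `program_text` to the model, ask for the final value of `target_variable`, and compare the reply with `expected_value`. Raising `num_vars` while holding `num_steps` fixed is one way to look for the point where a given model’s accuracy starts to drop.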

The study breaks down tasks using the BAPO model, which categorises problems based on their memory demands. Some tasks, like graph reachability or reasoning over sets of relationships, are deemed memory-intensive (BAPO-hard), while simpler tasks, such as finding the minimum or maximum value, are less so (BAPO-easy). If your task isn’t among these examples, it might be worth considering a straightforward solution that sidesteps heavy memory use altogether.
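One informal way to build intuition for the easy/hard split is to compare how much state a single left-to-right pass needs. This is our own illustration rather than the study’s formal argument, and both function names are hypothetical: finding a maximum needs one running value however long the input is, while answering a reachability question that arrives after the edges means holding on to the edges.

```python
from collections import defaultdict, deque
from typing import Dict, Iterable, List, Optional, Tuple


def max_one_pass(values: Iterable[int]) -> Optional[int]:
    # A single running value is all the state this needs, regardless of input length.
    best: Optional[int] = None
    for v in values:
        if best is None or v > best:
            best = v
    return best


def reachable_one_pass(edges: Iterable[Tuple[int, int]],
                       source: int, target: int) -> bool:
    # The edges are consumed before the (source, target) question is used,
    # so this implementation keeps every edge: its state grows with the input.
    adj: Dict[int, List[int]] = defaultdict(list)
    for u, v in edges:
        adj[u].append(v)
    # Breadth-first search over the stored directed edges.
    seen = {source}
    queue = deque([source])
    while queue:
        node = queue.popleft()
        if node == target:
            return True
        for nxt in adj[node]:
            if nxt not in seen:
                seen.add(nxt)
                queue.append(nxt)
    return False
```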

For tasks that push the memory envelope, several strategies can help. You might use reasoning-enabled models, break problems into bite-sized pieces, pre-annotate data, or even outsource some of the heavy lifting to external tools. While these approaches are promising, they also highlight that memory challenges in LLMs remain an open field for future innovation.
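As a sketch of the “bite-sized pieces” idea, the code below splits a counting question over a long list into small chunks and keeps the running total in ordinary code. `count_in_chunk` is a hypothetical stand-in for a per-chunk model call (here it just counts locally so the example runs), and the other names and the default chunk size are assumptions too.

```python
from typing import Callable, List


def chunk(items: List[str], size: int) -> List[List[str]]:
    # Split the input into consecutive pieces of at most `size` items.
    if size < 1:
        raise ValueError("chunk size must be positive")
    return [items[i:i + size] for i in range(0, len(items), size)]


def count_in_chunk(words: List[str], term: str) -> int:
    # Hypothetical stand-in for asking a model "how many times does `term`
    # appear in this short passage?"; replace with a real call in practice.
    return sum(1 for w in words if w == term)


def decomposed_count(words: List[str], term: str, chunk_size: int = 50,
                     per_chunk: Callable[[List[str], str], int] = count_in_chunk) -> int:
    # Each sub-question only covers `chunk_size` items, and the aggregate
    # lives in this function rather than in the model's working memory.
    return sum(per_chunk(piece, term) for piece in chunk(words, chunk_size))
```

The chunk size is the main knob: small enough that each sub-question stays well within what the model handles reliably, large enough that the number of calls stays manageable.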

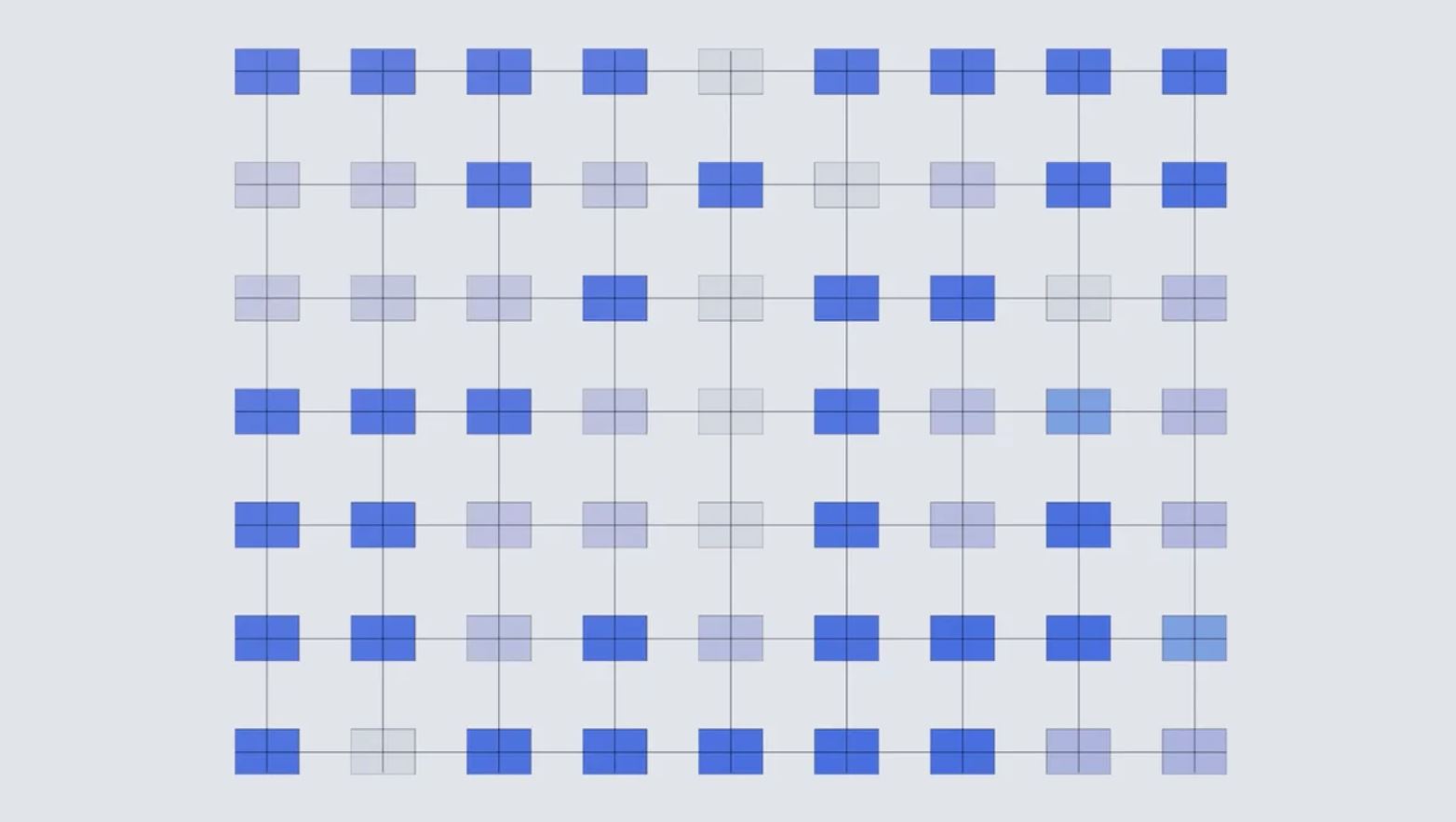

The researchers also developed a theoretical framework to model how transformers, the architecture powering these LLMs, handle memory. When posed with questions about long texts, models either try to carry forward every bit of crucial information or perform targeted lookups. Because transformers process text in a single left-to-right pass, though, the earlier parts of a document are processed before the question arrives, and the model can’t simply go back and re-read them with the question in mind.

This framework uses two key parameters: one limits how much information can be pre-computed from the earlier text, and the other limits how much can be retrieved on demand once the question is known. If the memory load grows with the task size, the LLM can quickly hit its limits, regardless of how long the context window might be.
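To make the trade-off between pre-computing and retrieving concrete, here is a toy sketch loosely inspired by the framework; it is not the researchers’ formal definition. The function name, the idea of counting both budgets in key-value pairs, and the idealised retrieval step that always finds matching keys are all our own simplifying assumptions.

```python
from typing import List, Optional, Tuple

Pair = Tuple[str, int]


def answer_lookup(prefix: List[Pair], query: str,
                  prefix_budget: int, attention_budget: int) -> Optional[int]:
    """Toy model: answer `query` about `prefix` under two memory budgets.

    prefix_budget: how many pairs can be pre-computed and passed forward
        before the query is known.
    attention_budget: how many prefix pairs can be retrieved on demand
        once the query is known.
    """
    # Pre-computed path: the prefix is summarised without knowing the query,
    # so this toy simply keeps the first `prefix_budget` pairs.
    summary = dict(prefix[:prefix_budget])
    if query in summary:
        return summary[query]
    # On-demand path: an idealised lookup that fetches up to
    # `attention_budget` pairs whose key matches the query.
    matches = [value for key, value in prefix if key == query][:attention_budget]
    return matches[0] if matches else None
```

For a single-key lookup, one retrieval slot is enough no matter how long the prefix gets. A question that depends on every pair, such as counting how many keys share some property, would need one of the budgets to grow with the prefix, which is the situation described above.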

If you’ve been frustrated by LLMs stumbling on tasks that involve deep, context-rich reasoning, this research offers both a diagnosis and some potential remedies. Keeping these working memory limits in mind can be a big help in designing more robust and effective applications.