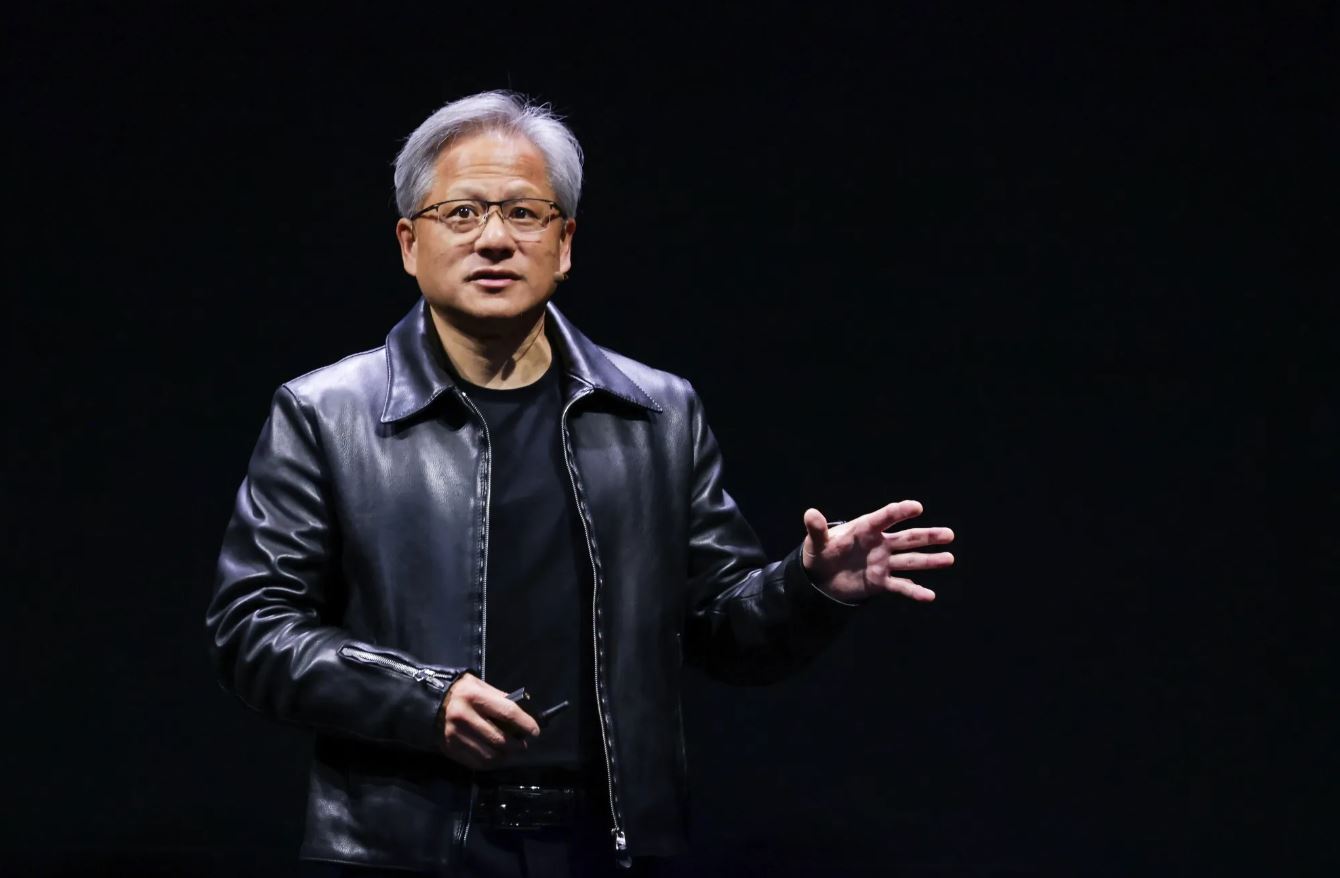

Nvidia’s CEO, Jensen Huang, has a fascinating vision for the future of business, one where every company operates as an ‘AI factory.’ This idea is at the heart of how he sees modern businesses evolving. During a keynote at Nvidia’s recent AI conference, Huang described how companies will transform into entities that generate ‘tokens,’ the basic units that AI systems process. In his view, this shift will change how businesses operate.

Tokens, as Huang explains, are numerical symbols that AI uses to interpret data, breaking complex information into manageable parts. For example, the word ‘darkness’ might be translated into specific numbers representing its components, allowing AI systems to efficiently recognize and connect different concepts. Tokens are crucial because AI models depend on them for training and optimization.
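To make the ‘darkness’ example concrete, here is a minimal Python sketch of how a word could be split into pieces and mapped to numbers. The vocabulary, its ID values, and the `tokenize` helper are all hypothetical and chosen for illustration; production tokenizers learn vocabularies of tens of thousands of entries from data and use more elaborate splitting rules than this greedy longest-match loop.

```python
# Hypothetical toy vocabulary: each known text piece maps to a numeric ID.
VOCAB = {
    "dark": 0, "ness": 1, "light": 2,
    "d": 3, "a": 4, "r": 5, "k": 6, "n": 7, "e": 8, "s": 9,
}


def tokenize(word, vocab=VOCAB):
    """Split `word` into the longest matching vocabulary pieces and return their IDs."""
    ids = []
    start = 0
    while start < len(word):
        # Try the longest remaining substring first, then shrink it.
        for end in range(len(word), start, -1):
            piece = word[start:end]
            if piece in vocab:
                ids.append(vocab[piece])
                start = end
                break
        else:
            # No piece, not even a single character, matched.
            raise ValueError(f"no vocabulary entry covers {word[start]!r}")
    return ids


print(tokenize("darkness"))  # [0, 1]
```

Here ‘darkness’ becomes the two IDs for ‘dark’ and ‘ness’, which shows how a model can relate the word to other words that share those pieces.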

Huang and other tech experts believe that the ability to produce these tokens will define successful companies. Essentially, these companies will become production hubs for AI, creating tokens that boost AI functionalities across various industries. ‘I call them AI factories,’ Huang stated, highlighting their unique role in generating tokens for diverse applications like music, video, and even scientific research.

He illustrated this with examples from the automotive industry, where traditional car factories might have ‘AI factories’ alongside them, dedicated to developing autonomous driving technologies. Nvidia’s partnership with General Motors is a prime example, as they collaborate to integrate AI into manufacturing and vehicle automation.

A practical example of this concept is Tesla’s approach to data collection. Tesla vehicles gather extensive data through their sensors, and that data is converted into tokens that refine the company’s autonomous driving systems. This illustrates a data-driven strategy that proponents say outperforms conventional, isolated development methods.

Jason Liu, an AI consultant, argues that in an AI-driven world, a company’s main function will revolve around data generation. He contrasts Tesla’s expansive data collection strategy with Waymo’s more limited approach, underscoring the importance of extensive data in creating effective AI solutions.

The tokenization process also extends to decision-making within companies. Liu suggests that past business decisions, documented in communications and meetings, can be tokenized to train AI systems for future strategic planning, enhancing decision-making efficiency.

Guillermo Rauch from Vercel explains how their tool, v0, uses user input to produce applications, effectively generating tokens for AI use. Similarly, OpenEvidence employs AI to distill complex medical research into usable information for healthcare professionals, demonstrating the versatility of token-based AI systems.

In conclusion, the concept of AI factories is reshaping business paradigms, with companies like Mercor leveraging expert knowledge to enhance AI capabilities. As businesses accumulate knowledge and practices, that material will feed into AI systems, turning the businesses themselves into sophisticated, token-generating entities. This shift not only redefines business operations but also heralds a new era of AI-driven innovation.