If you’ve ever wrestled with intricate data pipelines, you’re not alone. Tools like dbt have streamlined the process of building SQL data pipelines, yet even the best‑defined models can knot up quickly, leaving you with a headache when trying to debug changes.

Traditional code reviews tend to focus solely on the code itself, often overlooking how those changes ripple through your data. If you’ve ever tried to trace a change in a complex Directed Acyclic Graph (DAG) with nested dependencies, you know it can feel nearly impossible to pinpoint which parts of your data are affected. For example, GitLab’s dbt DAG illustrates how even a modest SQL tweak can have widespread effects across a large data ecosystem.

Data validation is about confirming that your SQL logic still matches real‑world needs. Whether you’re updating models to meet new requirements or refactoring for efficiency, you need to check that your changes don’t inadvertently distort your metrics. A quick spot check, such as a row count or a schema review, can miss changes in the values themselves, yet inspecting every downstream model in detail is both resource‑intensive and costly.

The key is to adopt a more precise, change‑aware approach. By mapping out the relationships between your models (model‑to‑model, column‑to‑column, or model‑to‑column), you can zero in on the areas that matter most. This lets you classify each SQL change as non‑breaking, partially breaking, or fully breaking. For instance, adding a new column is non‑breaking because it won’t disrupt existing data. Renaming a column is partially breaking because it might only affect the models that reference it. Changing a filter is fully breaking because it alters which rows exist and can send shockwaves across every downstream model.
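As a rough illustration of that classification, the sketch below maps each change type to the set of models worth validating. Everything in it is hypothetical: the model names, the `CHILDREN` and `USES` dictionaries, and the `models_to_validate` helper are invented for this example and are not part of dbt or any other tool.

```python
from collections import deque
from enum import Enum


class ChangeKind(Enum):
    NON_BREAKING = "non_breaking"          # e.g. a new column is added
    PARTIAL_BREAKING = "partial_breaking"  # e.g. a column is renamed
    BREAKING = "breaking"                  # e.g. a filter changes which rows exist


# Hypothetical model-to-model lineage: parent -> direct children.
CHILDREN = {
    "stg_orders": ["orders", "customers"],
    "orders": ["revenue_daily"],
    "customers": ["customer_ltv"],
    "revenue_daily": [],
    "customer_ltv": [],
}

# Hypothetical model-to-column lineage: model -> (parent, column) pairs it reads.
USES = {
    "orders": {("stg_orders", "order_id"), ("stg_orders", "amount")},
    "customers": {("stg_orders", "customer_id")},
    "revenue_daily": {("orders", "amount")},
    "customer_ltv": {("customers", "customer_id")},
}


def downstream(model):
    """Return every model reachable from `model`, in breadth-first order."""
    seen = []
    queue = deque(CHILDREN.get(model, []))
    while queue:
        node = queue.popleft()
        if node not in seen:
            seen.append(node)
            queue.extend(CHILDREN.get(node, []))
    return seen


def models_to_validate(model, kind, column=None):
    """Pick the models to check for a change of `kind` made to `model`."""
    if kind is ChangeKind.NON_BREAKING:
        return []  # existing data is untouched, nothing downstream to check
    if kind is ChangeKind.BREAKING:
        return downstream(model)  # row-level change: everything downstream
    # Partially breaking: only direct children that read the changed column.
    return [
        child
        for child in CHILDREN.get(model, [])
        if (model, column) in USES.get(child, set())
    ]


print(models_to_validate("stg_orders", ChangeKind.BREAKING))
print(models_to_validate("stg_orders", ChangeKind.PARTIAL_BREAKING, "customer_id"))
```

In this toy graph, a filter change on `stg_orders` flags all four downstream models, while renaming `customer_id` flags only `customers`. A real project would derive the lineage from parsed SQL rather than hand-written dictionaries.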

Column‑level lineage narrows the focus further, reducing what might be an overwhelming series of checks to just a handful of key models. This improves precision and lets you target validation where it has the most impact, trimming unnecessary workload.

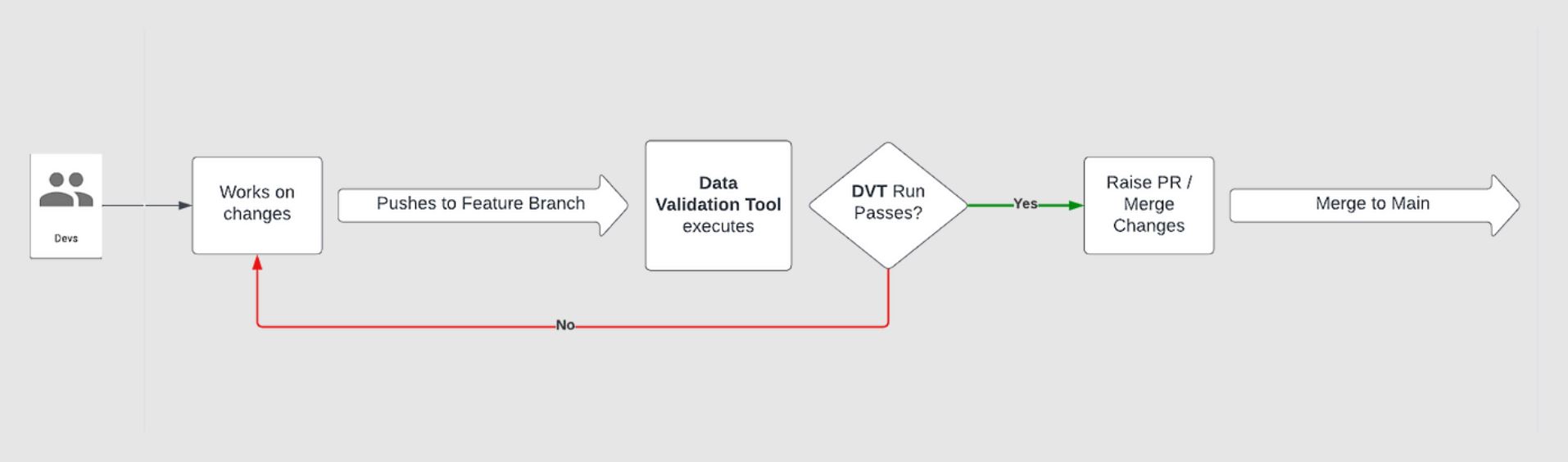
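To make the column‑level idea concrete, here is a minimal sketch that follows a single changed column through a column‑to‑column lineage map and reports only the models that inherit from it. The `COLUMN_CHILDREN` mapping, the model and column names, and the `impacted_models` helper are all assumptions made up for illustration, not output from any lineage tool.

```python
# Hypothetical column-to-column lineage:
# (model, column) -> columns in other models derived from it.
COLUMN_CHILDREN = {
    ("stg_orders", "amount"): [("orders", "amount")],
    ("stg_orders", "customer_id"): [
        ("orders", "customer_id"),
        ("customers", "customer_id"),
    ],
    ("orders", "amount"): [("revenue_daily", "revenue")],
    ("orders", "customer_id"): [],
    ("customers", "customer_id"): [("customer_ltv", "customer_id")],
}


def impacted_models(model, column):
    """Return the models holding a column derived from (model, column)."""
    seen = set()
    stack = [(model, column)]
    while stack:
        node = stack.pop()
        for child in COLUMN_CHILDREN.get(node, []):
            if child not in seen:
                seen.add(child)
                stack.append(child)
    # Collapse the reached columns to their models, sorted for stable output.
    return sorted({child_model for child_model, _ in seen})


print(impacted_models("stg_orders", "amount"))
```

Here a change to `stg_orders.amount` reaches only `orders` and `revenue_daily`, so `customers` and `customer_ltv` can be skipped even though they sit downstream of `stg_orders` at the model level.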

By embracing a systematic, change‑aware validation process, you keep your data’s integrity intact while simplifying reviews and minimising costs.