Prescriptive modelling isn’t just about crunching numbers or forecasting outcomes—it’s about recommending actions to shape the future. But here’s something you might not realise: every time you shift from predicting to prescribing, you’re essentially making a bet on causality.

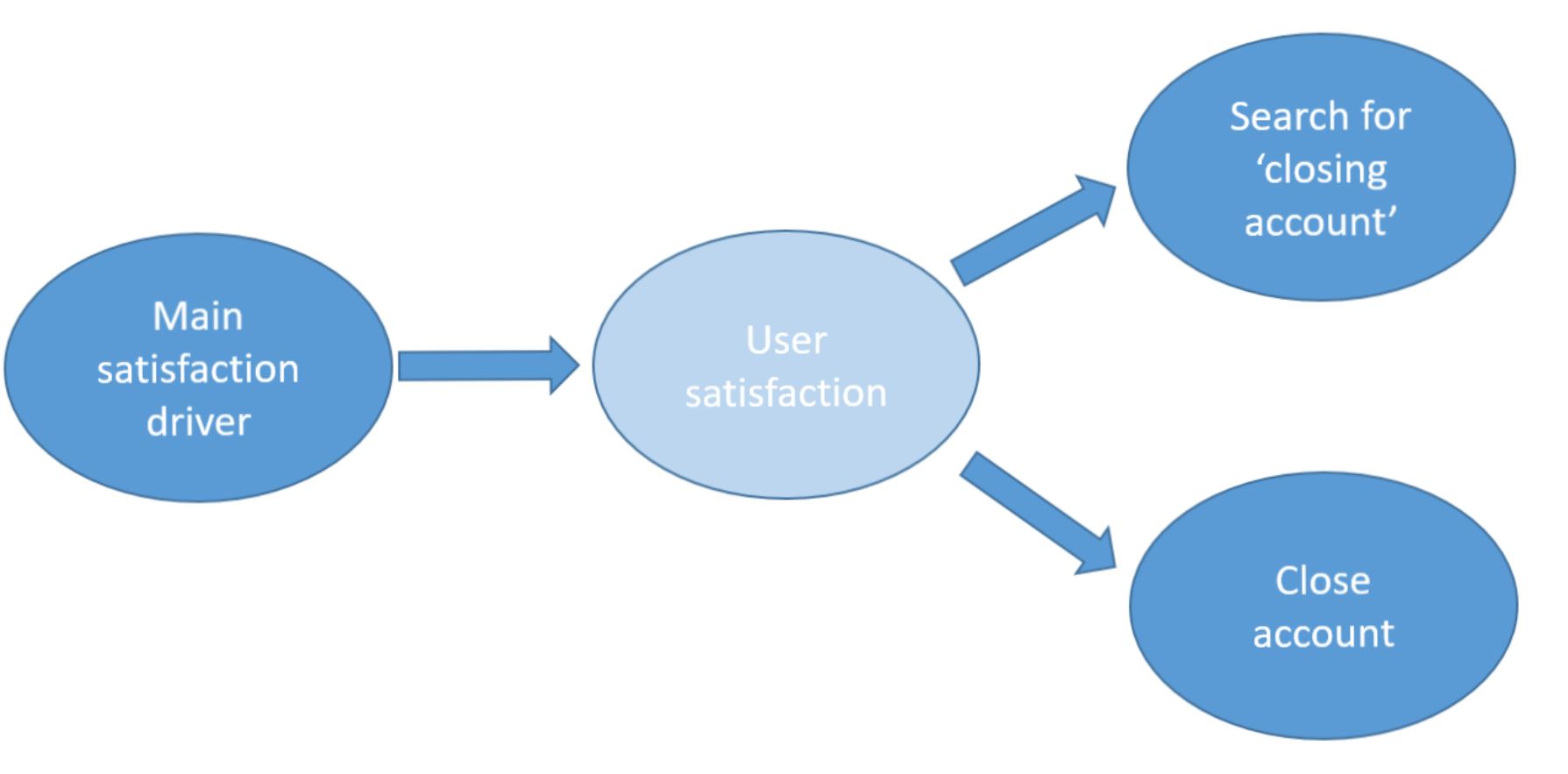

If you’ve ever wrestled with turning insights into action, you know the challenge. Many practitioners are comfortable spotting trends with descriptive or predictive analytics, yet overlook the fact that prescriptive techniques lean heavily on assumed cause-and-effect relationships. A quick online search for “prescriptive analytics” turns up plenty of discussion of the mechanics but very little about the causal assumptions underneath.

Imagine you’re tasked with reducing customer churn. You build a predictive model that highlights the key factors, perhaps even testing different discount scenarios. But the moment you advise on the best course of action, you are trusting that the model captures genuine cause-and-effect links. If it doesn’t, you are a bit like someone pushing a rubber duck around a pool in the hope that it will clear the leaves: an amusing notion, but hardly practical.
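
To see how a purely predictive reading can point the wrong way, here is a minimal Python sketch on simulated data. Everything in it is an assumption made for illustration: the hypothetical `simulate_customers` function, a 30% share of high-risk customers, a historical policy that mostly offered discounts to those high-risk customers, and a true effect in which a discount cuts churn probability by 0.10.

```python
import random

def simulate_customers(n, seed=0):
    """Hypothetical churn data. Assumption: high-risk customers churn more
    AND were more likely to be offered a discount under past policy."""
    rng = random.Random(seed)
    rows = []
    for _ in range(n):
        high_risk = rng.random() < 0.3
        discount = rng.random() < (0.8 if high_risk else 0.1)
        base = 0.5 if high_risk else 0.15
        # Assumed true causal effect: a discount lowers churn probability by 0.10
        p_churn = base - 0.10 if discount else base
        churned = rng.random() < p_churn
        rows.append((high_risk, discount, churned))
    return rows

def churn_rate(rows):
    """Share of rows where the customer churned (True counts as 1)."""
    return sum(churned for _, _, churned in rows) / len(rows)

def naive_effect(rows):
    """Churn rate of discounted minus non-discounted customers, ignoring risk."""
    treated = [r for r in rows if r[1]]
    control = [r for r in rows if not r[1]]
    return churn_rate(treated) - churn_rate(control)

def adjusted_effect(rows):
    """Compare within each risk group, then weight each group by its size."""
    total = 0.0
    for group in (True, False):
        sub = [r for r in rows if r[0] == group]
        treated = [r for r in sub if r[1]]
        control = [r for r in sub if not r[1]]
        total += (churn_rate(treated) - churn_rate(control)) * len(sub) / len(rows)
    return total

rows = simulate_customers(200_000)
print(f"naive:    {naive_effect(rows):+.3f}")     # positive: discounts 'look' harmful
print(f"adjusted: {adjusted_effect(rows):+.3f}")  # close to the assumed -0.10
```

Under these assumptions the naive comparison comes out positive (about +0.14 in expectation), so a model trained on this history would associate discounts with churn, while comparing like with like inside each risk group recovers an effect near -0.10. Prescribing "stop the discounts" from the naive pattern would be exactly the wrong bet on causality. The adjustment only works here because risk is recorded in the data.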

Consider a pitch on a show like Shark Tank in which someone claims that kids with savings accounts are more likely to go to college, with the implied recommendation that opening an account will help a child get there. Without accounting for factors such as family income or background, that recommendation oversimplifies a far more tangled reality. Similarly, initiatives like the Reading Is Fundamental programme assumed that simply distributing more books would automatically boost literacy, missing the deeper causal factors underneath.
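
A tiny worked example shows how family income alone can manufacture the savings-account pattern. The counts below are made up purely for illustration (they are not survey data), and the two income bands are a hypothetical simplification: within each band, children with and without accounts go to college at the same rate.

```python
# Made-up counts for illustration only:
# (income_band, has_savings_account) -> (children, went_to_college)
COUNTS = {
    ("low", True): (100, 20),
    ("low", False): (400, 80),
    ("high", True): (400, 280),
    ("high", False): (100, 70),
}

def rate(keys):
    """College rate pooled over the given (band, account) cells."""
    kids = sum(COUNTS[k][0] for k in keys)
    college = sum(COUNTS[k][1] for k in keys)
    return college / kids

# Pooled comparison: ignores family income entirely.
pooled_gap = rate([("low", True), ("high", True)]) - rate([("low", False), ("high", False)])

# Stratified comparison: compare like with like inside each income band.
band_gaps = {
    band: rate([(band, True)]) - rate([(band, False)])
    for band in ("low", "high")
}

print(f"pooled gap: {pooled_gap:.2f}")
print({band: round(gap, 2) for band, gap in band_gaps.items()})
```

Pooled, account holders go to college at 60% versus 30%, a 30-point gap. Within each income band the gap is zero, because wealthier families are both more likely to open accounts and more likely to send children to college. In this toy world, prescribing savings accounts would change nothing.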

The type of data you use adds another layer to this. Experimental data, where the action is assigned at random, is usually the most reliable way to confirm a causal effect, because randomisation breaks the link between who receives the action and everything else about them. Observational data, on the other hand, can tempt you with correlations but often falls short of establishing cause and effect. Even if you adjust your model for the confounders you can measure, it’s rarely a perfect fix: unmeasured confounders remain, so adjustment is a step in the right direction rather than a cure.
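
The contrast between experimental and observational data can be sketched with a small simulation. This is a minimal illustration under stated assumptions, not a general method: the hypothetical `simulate` function generates an outcome with an assumed true effect of 2.0, one confounder `x` that we observe and one confounder `u` that is never recorded, and in the observational regime both push people towards taking the action.

```python
import random
from statistics import fmean

TRUE_EFFECT = 2.0  # assumed causal effect of the action on the outcome

def simulate(n, randomised, seed=1):
    """Toy data: x is an observed confounder, u an unobserved one (both hypothetical)."""
    rng = random.Random(seed)
    rows = []
    for _ in range(n):
        x = int(rng.random() < 0.5)
        u = int(rng.random() < 0.5)
        if randomised:
            p_treat = 0.5                      # coin flip, independent of x and u
        else:
            p_treat = 0.2 + 0.3 * x + 0.3 * u  # self-selection driven by x and u
        t = int(rng.random() < p_treat)
        y = TRUE_EFFECT * t + 3 * x + 3 * u + rng.gauss(0, 1)
        rows.append((x, t, y))                 # u is never recorded
    return rows

def diff_in_means(rows):
    """Mean outcome of treated rows minus mean outcome of untreated rows."""
    treated = [y for _, t, y in rows if t]
    control = [y for _, t, y in rows if not t]
    return fmean(treated) - fmean(control)

def adjusted_for_x(rows):
    """Stratify on the observed confounder x, then weight strata by their size."""
    est = 0.0
    for level in (0, 1):
        stratum = [r for r in rows if r[0] == level]
        est += diff_in_means(stratum) * len(stratum) / len(rows)
    return est

experiment = simulate(100_000, randomised=True)
observational = simulate(100_000, randomised=False)

print(f"experiment, raw comparison:  {diff_in_means(experiment):.2f}")
print(f"observational, raw:          {diff_in_means(observational):.2f}")
print(f"observational, adjusted (x): {adjusted_for_x(observational):.2f}")
```

Under these assumptions the randomised comparison lands near the true 2.0, the raw observational gap is close to 3.8, and adjusting for `x` brings it down to roughly 3.0. That is a genuine improvement, but the hidden `u` still biases the answer, which is the "rarely a perfect fix" problem in miniature.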

So, how do you manage these inherent risks? It’s about recognising the limitations and continuously refining your approach. By addressing as many confounding factors as you can, you strengthen your position and put the actions you recommend on firmer ground, even if no adjustment can guarantee it. This isn’t a deal-breaker; rather, it’s a reminder that effective prescriptive modelling rests on a careful balance of optimism and rigour.